2. School of Geodesy and Geomatics, Wuhan University, Wuhan 430079, China

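Remote sensing satellites observe the attributes of the Earth's surface and their spatiotemporal variation from space. Their application potential depends on how well a sensor can detect the intensity, spatial differences, and temporal changes of the electromagnetic energy that ground objects reflect or emit in different spectral ranges, which is generally characterized by the spatial, temporal, and spectral resolution of the imagery. Acquiring high-resolution remote sensing data was ranked first among the three major challenges identified by the International Society for Photogrammetry and Remote Sensing, and is one of China's 16 major science and technology projects [1]. However, constrained by satellite orbits, observation modes, and sensor performance, spatial, temporal, and spectral resolution trade off against one another. It is therefore impossible to "see clearly", "measure quickly", and "compute accurately" at the same time, which makes it difficult to finely characterize the spatial patterns and evolution of complex geoscience phenomena [2].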

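Multi-source remote sensing information fusion can overcome the limitations of single-sensor observation. It jointly processes and applies the complementary information in multi-platform, multi-modal observations to produce seamless, large-scene data with high temporal, spatial, and spectral resolution. Traditional approaches to this problem are mainly model-driven: they use the degradation process, physical mechanisms, and prior knowledge to build an objective function for a specific goal, and obtain the optimal estimate by solving it [3]. With such methods, considerable progress has been made in improving the spatial, spectral, and temporal properties of remote sensing data, mainly in the following four areas.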

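(1) Multi-temporal/multi-angle fusion: this mainly exploits the spatiotemporally redundant information caused by sub-pixel motion among multiple images, fusing their complementary content into a high-quality, high-resolution image. Traditional methods include frequency-domain sub-pixel shift methods and spatial-domain methods such as non-uniform interpolation [4], iterative back projection [5], projection onto convex sets [6], regularization [7], and sparse coding [8].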

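(2) Spatial-spectral fusion: this mainly alleviates the trade-off between spatial and spectral resolution, using the spatial-spectral complementarity of multi-source images to generate imagery with both high spatial and high spectral resolution. It includes panchromatic/multispectral fusion and panchromatic (or multispectral)/hyperspectral fusion. In the latter case, the spectral ranges of the panchromatic (or multispectral) and hyperspectral images often differ considerably, so preserving spectral fidelity in the fused image is relatively difficult. Current mainstream methods include component substitution [9], multiresolution analysis [10], and variational optimization [11].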

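(3) Spatiotemporal fusion: this mainly alleviates the trade-off between temporal and spatial resolution to generate temporally continuous imagery with high spatial resolution. Methods are assisted either by a single-date image pair or by multi-date image pairs. Mainstream algorithms include those based on spectral unmixing [12], spatiotemporal filtering [13], dictionary learning [14], and Bayesian estimation [15]. Among them, spatiotemporal filtering algorithms such as the spatial and temporal adaptive reflectance fusion model (STARFM) and the enhanced STARFM (ESTARFM) are widely used, for example for surface reflectance and land surface temperature.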

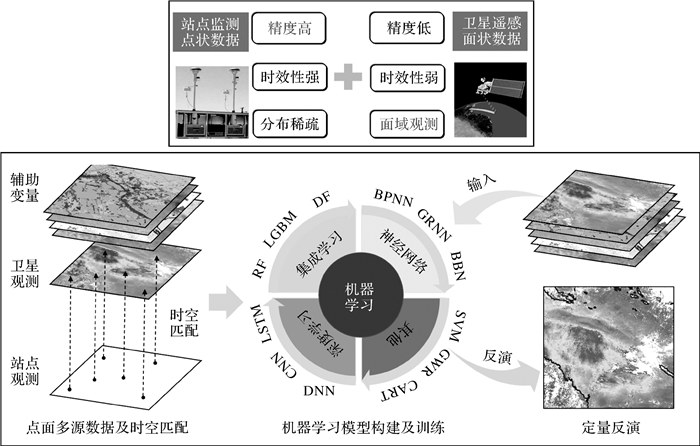

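(4) Point-surface data fusion: point data, such as ground station observations, are accurate but sparsely distributed; surface (areal) data, such as satellite observations, are less accurate but spatially continuous with wide coverage. Point-surface fusion combines the strengths of both to obtain quantitative products that are accurate, spatially continuous, and wide in coverage. It is widely applied in the geosciences, for example in a snow depth retrieval model based on a deep belief network [16], an hourly PM2.5 concentration retrieval model based on a long short-term memory network [17], and a precipitation retrieval model based on random forest [18].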

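Overall, for improving the swath, spatial, spectral, and temporal observation capability of data, model-driven methods accommodate the diversity of image types and the complexity of imaging well, and can describe the temporal, spatial, and spectral degradation relationships among multiple datasets. However, most model-driven methods assume that multi-source observations are related only by linear degradation. They struggle to handle the nonlinear characteristics of swath-spatial, spatial-spectral, spatiotemporal, and heterogeneous relationships precisely, which limits fusion accuracy when the data do not overlap spatially, do not cover the same spectral range, or are spatiotemporally heterogeneous [19].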
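To make the linear-degradation assumption concrete, the following minimal sketch simulates a low-resolution observation as a block average (blur plus decimation) of a high-resolution image and checks that the operator is linear. The function name `degrade` and the box-average kernel are illustrative assumptions, not an operator taken from the works cited above.

```python
import numpy as np

def degrade(hr, scale):
    """Hypothetical linear degradation: average each scale x scale block."""
    h, w = hr.shape
    assert h % scale == 0 and w % scale == 0
    # Element [i, a, j, b] of the reshaped array is hr[i*scale + a, j*scale + b].
    return hr.reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))

rng = np.random.default_rng(0)
a = rng.random((8, 8))
b = rng.random((8, 8))

# Linearity: degrading a weighted sum equals the weighted sum of degraded images.
lhs = degrade(2.0 * a + 3.0 * b, 2)
rhs = 2.0 * degrade(a, 2) + 3.0 * degrade(b, 2)
print(np.allclose(lhs, rhs))
```

Relationships that cannot be written as such a linear operator, for example between optical and SAR observations, are the cases where this assumption breaks down.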

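In recent years, thanks to rapid advances in computing power and artificial intelligence algorithms, data-driven multi-source remote sensing information fusion has begun to emerge. Data-driven methods do not require explicit assumptions or prior knowledge. By mining large numbers of data samples, they learn the feature relationships between input and output pairs, capture rich statistical features, and have achieved excellent fusion results. This paper therefore reviews data-driven multi-source remote sensing information fusion from three perspectives: homogeneous remote sensing data fusion, heterogeneous remote sensing data fusion, and information fusion for quantitative retrieval. It also outlines the current challenges and some potential directions for future development.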

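1 Homogeneous remote sensing data fusion

Homogeneous remote sensing data fusion refers to the fusion of observations acquired with the same imaging modality, the most common case being fusion among optical data imaged in the visible to near-infrared bands. Its main purpose is to alleviate the mutual constraints among spatial, temporal, and spectral resolution in single-source observations, which arise from the technical limits of sensor systems and from space-air-ground imaging conditions, and thereby obtain remote sensing data of high quality in all three resolutions. It mainly includes multi-temporal/multi-angle fusion, spatial-spectral fusion, and spatiotemporal fusion of remote sensing information.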

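1.1 Multi-temporal/multi-angle remote sensing information fusion

Multi-temporal/multi-angle remote sensing information fusion refers to mining and fusing the complementary information in multiple remote sensing images that exhibit sub-pixel shifts and scene aliasing, so as to reconstruct one or more high-quality images [20]. Typical applications include cloud removal by multi-temporal image fusion and super-resolution reconstruction. Super-resolution reconstruction can be traced back to the frequency-domain sub-pixel shift method, which showed that registering and fusing multiple images makes resolution enhancement possible [21]. Because frequency-domain methods are poorly suited to incorporating prior information about the high-resolution image, traditional methods gradually shifted to the spatial domain. Typical representatives are non-uniform interpolation [4], iterative back projection [5], projection onto convex sets [6], regularization [7], and sparse coding [8].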
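The idea that sub-pixel-shifted images carry complementary samples can be illustrated with a toy shift-and-add reconstruction, the simplest relative of non-uniform interpolation. This sketch assumes idealized conditions that real data do not satisfy: the shifts are known exactly, fall on the high-resolution grid, and there is no blur or noise. The function name `shift_and_add` is hypothetical.

```python
import numpy as np

def shift_and_add(frames, offsets, scale):
    """Place each low-resolution frame on the high-resolution grid at its known offset."""
    h, w = frames[0].shape
    acc = np.zeros((h * scale, w * scale))
    cnt = np.zeros_like(acc)
    for frame, (dy, dx) in zip(frames, offsets):
        acc[dy::scale, dx::scale] += frame
        cnt[dy::scale, dx::scale] += 1
    out = np.full_like(acc, np.nan)   # cells that no frame observed stay NaN
    filled = cnt > 0
    out[filled] = acc[filled] / cnt[filled]
    return out

scale = 2
hr = np.arange(36, dtype=float).reshape(6, 6)
offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]
# Simulate four low-resolution frames, each sampling a different sub-pixel phase.
frames = [hr[dy::scale, dx::scale] for dy, dx in offsets]
sr = shift_and_add(frames, offsets, scale)
print(np.array_equal(sr, hr))
```

With fewer frames than phases, some high-resolution cells remain unobserved, which is where interpolation, regularization, or learned priors come in.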

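With the rise of deep learning, data-driven super-resolution methods have shown stronger performance in solving complex nonlinear inverse problems. The nonlinear feature representation capability of deep neural networks enables deep representations of the super-resolution problem, and the availability of massive remote sensing data provides the training data that such models need. At present, multi-temporal super-resolution improves resolution mainly through image registration followed by fusion and reconstruction. Registration methods are either optical-flow-based or kernel-based; fusion methods are mainly direct fusion [22], attention-based fusion [23], and multi-step fusion [24].

Optical-flow-based registration [25] operates explicitly at the image level. It estimates the optical flow between the image to be registered and the reference image, so that the motion caused by pixel displacement is encoded in the flow, and then warps the image onto the reference with the flow field. Kernel-based registration operates implicitly at the feature-map level, adaptively convolving the features to be registered with learnable kernels. Representative methods include kernel regression [26], deformable convolution [27], and non-local modules [28]. Kernel regression and deformable convolution focus on local motion and offsets: they capture or track pixel movement through convolution, so that the local receptive field always contains redundant information useful for reconstruction. Non-local modules focus on the global response of each pixel and assign weights to different regions of the whole image through non-local attention.

The subsequent fusion step aggregates the registered spatiotemporal information. Direct fusion typically applies simple convolutions to the concatenated features as a nonlinear mapping. Attention-based fusion modulates the contribution of each feature, emphasizing useful information and suppressing useless information. Multi-step fusion aggregates the information in the multi-temporal images onto the spatial information of the reference image in a multi-stage or recurrent manner, to keep the reconstruction highly consistent with the reference target. Fig. 1 shows the current deep-learning-based workflow for multi-temporal/multi-angle super-resolution reconstruction; compared with traditional methods, data-driven models reconstruct more texture and detail.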

Fig. 1 Multi-temporal/multi-angle remote sensing information fusion
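The attention-based fusion step can be sketched as a per-pixel softmax weighting over frames that are already registered to the reference. In the networks cited above the weights are learned; the hand-crafted similarity score, the `temperature` parameter, and the function name `attention_fuse` below are assumptions made purely for illustration.

```python
import numpy as np

def attention_fuse(ref, aligned, temperature=1.0):
    """Fuse aligned frames with per-pixel softmax weights.

    ref:     (H, W) reference frame.
    aligned: (T, H, W) frames already registered to the reference.
    """
    # Frames that agree with the reference at a pixel receive a higher score there.
    scores = -((aligned - ref[None]) ** 2) / temperature
    scores -= scores.max(axis=0, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=0, keepdims=True)    # softmax over the frame axis
    return (weights * aligned).sum(axis=0), weights

ref = np.zeros((4, 4))
aligned = np.stack([np.zeros((4, 4)), np.full((4, 4), 10.0)])
fused, weights = attention_fuse(ref, aligned)
print(weights[:, 0, 0])   # the frame matching the reference dominates
```

Direct fusion would instead concatenate the frames and apply the same convolution everywhere, without this per-pixel modulation.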

1.2 Spatial-spectral fusion of remote sensing information

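Spatial-spectral fusion of remote sensing information exploits the complementary spatial and spectral information in different remote sensing images to generate imagery with both high spatial and high spectral resolution. It addresses the trade-off between spatial detail and spectral fineness that results from system limitations such as sensor hardware and imaging conditions. Common spatial-spectral fusion methods involve three types of image data: panchromatic, multispectral, and hyperspectral images. Across these three types, spatial resolution decreases step by step while spectral resolution increases.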

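Panchromatic-multispectral fusion (pan-sharpening), which fuses a panchromatic image with a multispectral image to obtain high-quality multispectral data, is the most classical form of spatial-spectral fusion; the concept was proposed as early as the 1980s [9-11]. With the rapid development of spaceborne remote sensing, joint Earth observation at multiple spatial and spectral resolutions has taken shape, pan-sharpening has advanced quickly, and a large number of methods have been proposed [29-35]. Traditional pan-sharpening algorithms fall into four broad categories: component substitution [36-40], multiresolution analysis [41-44], model optimization [45-48], and machine learning [49-50]. Component substitution methods give better spatial detail but suffer severe spectral distortion [51], whereas multiresolution analysis methods preserve spectra better but give poorer spatial detail [52]. Reference [53] considered the strengths of both and proposed a framework that combines component substitution with multiresolution analysis. Model-optimization algorithms treat fusion as an inverse problem, impose different prior constraints on it, and solve it. Compared with component substitution and multiresolution analysis, their mathematical models are more rigorous and their accuracy is higher, but that accuracy depends heavily on how accurate the priors are, and the solution is complex and time-consuming. The earliest machine-learning fusion methods were based on sparse representation or dictionary learning [54-55]. The idea is to learn a complete spectral dictionary from a large number of samples, compute the dictionary and the corresponding sparse coefficients on the panchromatic and multispectral images, and fuse spatial and spectral information by exploiting the relationship between the dictionary and its coefficients.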
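As a rough illustration of the component substitution family, the sketch below replaces the intensity component of the upsampled multispectral image with a statistics-matched panchromatic image and injects the difference into every band with unit gain. It is a simplified generalized-IHS-style variant written for this explanation, not the algorithm of any specific cited paper; the nearest-neighbour upsampling and the name `cs_pansharpen` are assumptions.

```python
import numpy as np

def cs_pansharpen(ms, pan, scale):
    """Simplified component-substitution pan-sharpening.

    ms:  (B, h, w) low-resolution multispectral image.
    pan: (h*scale, w*scale) panchromatic image.
    """
    # Nearest-neighbour upsampling of every band to the panchromatic grid.
    ms_up = ms.repeat(scale, axis=1).repeat(scale, axis=2)
    intensity = ms_up.mean(axis=0)
    # Match the mean and standard deviation of pan to the intensity component.
    pan_matched = (pan - pan.mean()) / (pan.std() + 1e-12) * intensity.std() + intensity.mean()
    detail = pan_matched - intensity
    # Inject the same spatial detail into each band (unit injection gain).
    return ms_up + detail[None]

rng = np.random.default_rng(0)
ms = rng.random((3, 4, 4))
pan = rng.random((8, 8))
fused = cs_pansharpen(ms, pan, scale=2)
print(fused.shape)
```

Because the same detail is added to all bands regardless of their spectral response, spectral distortion appears whenever the panchromatic image differs radiometrically from the intensity component, which is the weakness noted above.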

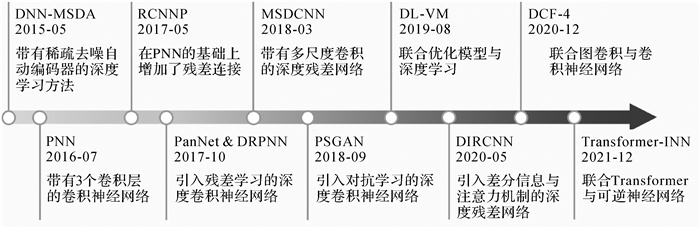

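Since 2015, deep learning has attracted growing attention in pan-sharpening, as shown in Fig. 2. The main idea is to build networks that directly learn the nonlinear relationship between low- and high-spatial-resolution remote sensing data. The first deep-learning-based pan-sharpening algorithm, proposed in 2015, used only a simple deep neural network built from sparse denoising autoencoders [56]. Soon afterwards, convolutional neural networks, which are better suited to image processing, were applied to pan-sharpening [57]. With the rise of residual networks, researchers added residual connections to convolutional networks [58] or introduced residual learning [59-60]. However, single-scale convolution cannot fully exploit the multi-scale information in remote sensing images, so reference [61] introduced multi-scale convolution into a deep residual network to strengthen feature extraction. Although residual networks with multi-scale convolution already recover better detail than traditional algorithms, some researchers have further introduced adversarial learning from image generation into convolutional networks to improve detail. In addition, considering the lack of interpretability of deep learning and the difficulty of solving optimization models, researchers have proposed pan-sharpening algorithms that combine optimization models with deep learning [62]. To help networks learn the nonlinear relationship between high- and low-spatial-resolution images, recent work pays more attention to the relationships among image patches and handles them with modules such as attention mechanisms [63], graph convolution [64], and Transformers with multi-head attention [65]. Fig. 3 compares the pan-sharpening results of deep learning algorithms and traditional model-based algorithms; the data-driven algorithms have an advantage in detail injection.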

Fig. 2 Development of deep learning in pan-sharpening

Fig. 3 Spatial-spectral fusion of remote sensing information

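Beyond pan-sharpening, panchromatic-hyperspectral fusion [66-68] and multispectral-hyperspectral fusion [69-72] have also received increasing attention. Most algorithms for these two tasks are derived from pan-sharpening algorithms [73-75]. In addition, some researchers use 3D convolution to extract features from hyperspectral images and improve fusion accuracy [76], while others incorporate Laplacian methods to enhance spatial detail [77]. Fusion involving hyperspectral imagery is harder than pan-sharpening, because the spatial resolution gap between panchromatic and hyperspectral images is larger and the spectral-scale gap is several times greater. How to bridge this scale gap with effective techniques or auxiliary data therefore remains an open question.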

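1.3 Spatiotemporal fusion of remote sensing information

Spatiotemporal fusion of remote sensing information fuses images with high temporal but low spatial resolution with images with low temporal but high spatial resolution, producing an image sequence with sufficient resolution in both time and space. Over the past decade it has attracted considerable attention, and many methods have been developed. They fall into the following categories: spatiotemporal filtering [78], spectral unmixing [79], learning-based methods [80], and Bayesian methods [81]. Spatiotemporal filtering is very widely used. Reference [82] first introduced the spatial and temporal adaptive reflectance fusion model (STARFM), which later work extended by, for example, introducing the tasseled cap transformation [83], computing conversion coefficients between the high- and low-resolution images [84], combining regression kriging [85], adding bilateral filtering to determine the weights [86], using kernel-driven weighted combination [87], and using non-local filtering [88-89]. Unmixing-based spatiotemporal fusion was first proposed in reference [90] in 1999; as researchers introduced new constraints and auxiliary information [90-96], a robust unmixing-based fusion framework gradually took shape. Reference [97] used sparse representation for spatiotemporal fusion and opened the way for learning-based methods. Subsequent work improved the completeness and accuracy of the learned dictionaries and produced a series of dictionary-learning-based spatiotemporal fusion algorithms [98-99].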
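The core idea behind spatiotemporal filtering can be sketched as follows: the change observed between two coarse images is added to the fine image at the base date, and predictions from spectrally similar neighbours are averaged. This is a deliberately simplified, STARFM-like illustration. The actual STARFM weighting also accounts for temporal difference and spatial distance, and the function name, window size, and weight formula here are assumptions.

```python
import numpy as np

def starfm_like(f1, c1, c2, half_window=1, eps=1e-6):
    """Predict the fine image at date t2.

    f1: fine image at t1; c1, c2: coarse images at t1 and t2,
    all resampled to the same (H, W) fine grid.
    """
    h, w = f1.shape
    cand = f1 + (c2 - c1)                      # per-pixel candidate prediction
    pred = np.empty((h, w), dtype=float)
    for i in range(h):
        for j in range(w):
            i0, i1 = max(0, i - half_window), min(h, i + half_window + 1)
            j0, j1 = max(0, j - half_window), min(w, j + half_window + 1)
            # Neighbours that resemble the centre pixel at t1 get larger weights.
            sim = 1.0 / (np.abs(f1[i0:i1, j0:j1] - f1[i, j]) + eps)
            pred[i, j] = (sim * cand[i0:i1, j0:j1]).sum() / sim.sum()
    return pred

f1 = np.full((5, 5), 0.3)
c1 = np.full((5, 5), 0.2)
c2 = np.full((5, 5), 0.5)
print(starfm_like(f1, c1, c2)[0, 0])   # uniform scene: 0.3 + (0.5 - 0.2)
```

The sketch relies on the coarse-scale change being representative of the fine-scale change, which is the assumption that breaks down in heterogeneous landscapes and motivates the enhancements listed above.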

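In addition, some learning-based fusion algorithms do not rely on dictionary learning but use deep learning for spatiotemporal fusion [100-101]. The last category formulates fusion as an inverse problem, converts it into an optimal estimation problem, and solves it by Bayesian maximum a posteriori estimation; the algorithms differ in the emphasis of their inverse-problem models and in the optimization algorithms they adopt [102-103]. Overall, learning-based and Bayesian algorithms are relative newcomers. Although they currently give good results, whether they remain robust on long time series of remote sensing data still needs to be verified through extensive practice.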
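For readers unfamiliar with the maximum a posteriori formulation, a generic form is sketched below. The symbols are illustrative assumptions rather than notation from the cited works: $x$ is the unknown fused image, $y_k$ are the $K$ observations (assumed conditionally independent given $x$), $A_k$ are linear degradation operators, $R(x)$ is a prior (regularization) term, and $\lambda$ is its weight.

```latex
\hat{x}
  = \arg\max_{x}\; p(x \mid y_1, \dots, y_K)
  = \arg\min_{x}\; \Big\{ -\sum_{k=1}^{K} \log p(y_k \mid x) \;-\; \log p(x) \Big\}

% With y_k = A_k x + n_k, Gaussian noise n_k \sim \mathcal{N}(0, \sigma_k^2 I),
% and a prior p(x) \propto \exp(-\lambda R(x)), this reduces to
\hat{x}
  = \arg\min_{x}\; \sum_{k=1}^{K} \frac{1}{2\sigma_k^{2}} \,\lVert y_k - A_k x \rVert_2^{2}
    \;+\; \lambda\, R(x)
```

Under this reading, the differences among algorithms mentioned above correspond to different choices of $A_k$, $R(x)$, and the solver used for the minimization.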

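2 Heterogeneous observation data fusion

Heterogeneous observation data fusion has been a research hotspot in recent years. Its main purpose is to use the multi-dimensional information about ground objects provided by data acquired in different ways to interpret Earth observation data more accurately. This section describes the fusion of heterogeneous remote sensing images and the fusion of remote sensing observations with spatiotemporal big data.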

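2.1 Multi-source heterogeneous remote sensing data fusion

Different remote sensing data are acquired with different imaging modes. For example, SAR and LiDAR images are formed by active remote sensing, whereas optical images are formed by passive remote sensing. The same ground object appears differently under different imaging modes, which yields data with more dimensions. Multi-source heterogeneous fusion mainly integrates the multi-dimensional features of heterogeneous data so that the information in the images can be used more fully and interpreted more accurately.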

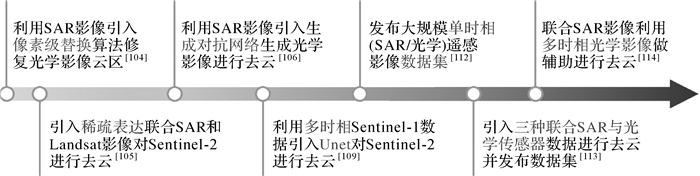

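Research on fusing SAR and optical imagery is mainly represented by SAR-assisted restoration of optical data. An early study used multi-frequency SAR images and a pixel-level replacement algorithm to restore cloud-covered areas in optical images of the same region, which effectively improved the usability of the optical images and the accuracy of ground-object interpretation [104]. Later, sparse representation was used to restore cloud-covered areas in Sentinel-2 images from single-frequency SAR images and the corresponding Landsat images [105]. Researchers also proposed a restoration pipeline in which a generative adversarial network first translates SAR data into the optical domain and the cloud-covered areas are then filled [106], followed by several related studies [107-108]. Using a U-Net convolutional neural network, multi-temporal Sentinel-1 and Sentinel-2 data were introduced into the restoration of a target Sentinel-2 image, giving more accurate results [109], again followed by related work [110-111]. Notably, the networks in these deep learning algorithms were trained on data from specific times and regions and cannot readily be applied to other times and scenes. To give pre-trained models stronger generalization, one group released a large-scale single-date SAR-optical dataset [112]; another introduced auxiliary Landsat data and released a dataset of triplets, each containing paired Sentinel-1, Sentinel-2, and Landsat-8 data [113]; and a further dataset of paired multi-temporal Sentinel-1 and Sentinel-2 data, which uses multi-temporal Sentinel-2 data as an aid, was released for optical image restoration [114]. Fig. 4 outlines the development of SAR-referenced optical data restoration. Fig. 5 shows a cloud removal result for an optical image with a SAR image as reference, which indicates that SAR imagery can support the restoration of optical information.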

Fig. 4 Development of optical data restoration assisted by SAR data

Fig. 5 Example of optical data restoration with SAR data
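The final filling step of the "translate, then fill" pipeline described above amounts to a masked composite: pixels flagged as cloudy are taken from the SAR-derived optical estimate, and all others keep the original optical values. The sketch below assumes that the translated image and the cloud mask are already available; how they are produced (for example by a generative network or a cloud detector) is outside its scope, and the function name `fill_clouds` is hypothetical.

```python
import numpy as np

def fill_clouds(optical, sar_translated, cloud_mask):
    """Replace cloudy pixels with the SAR-derived optical estimate.

    optical, sar_translated: (B, H, W) arrays on the same grid.
    cloud_mask: (H, W) boolean array, True where the optical image is cloudy.
    """
    return np.where(cloud_mask[None], sar_translated, optical)

optical = np.ones((3, 4, 4))
sar_translated = np.zeros((3, 4, 4))
cloud_mask = np.zeros((4, 4), dtype=bool)
cloud_mask[1:3, 1:3] = True
filled = fill_clouds(optical, sar_translated, cloud_mask)
print(filled[0])
```

In practice a radiometric adjustment at the mask boundary is usually needed to avoid visible seams; that step is omitted here.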

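Fusion for more accurate image interpretation is mainly represented by land-cover classification: supplementing optical classification with features from actively sensed data yields more accurate results. First, researchers extracted land-cover information from Landsat TM optical data, ALOS visible and near-infrared data, and PALSAR data with the traditional maximum likelihood method and artificial neural networks, and obtained more accurate classification than with optical imagery alone [115]. Next, MODIS and RADARSAT-2 data were fused with the IHS transform and the wavelet transform, and the fused result was easier to classify with traditional algorithms such as ISODATA [116]. Then, random forest was applied to Sentinel-1 SAR data and Landsat-8 optical data to extract cropland, showing that classification with heterogeneous data was far more accurate than with a single data source [117]; many similar machine learning studies followed, using for example support vector machines [118] and decision trees [119]. In addition, researchers proposed a deep-learning stacked sparse autoencoder model that estimates biomass from LiDAR, optical, and SAR data, with results more accurate than those of traditional and machine learning algorithms [120]; subsequent work used convolutional neural networks [121], U-Net [122], and deep homogeneous feature fusion [123]. These studies likewise found that classification with combined heterogeneous data is far better than with optical data alone.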

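2.2 Fusion of remote sensing observations and spatiotemporal big data

Because remote sensing data have a long acquisition cycle, they cannot by themselves support real-time monitoring of events on the ground. Spatiotemporal big data, including social media data, can reflect real-world conditions effectively and in real time. Fusing the two aims to combine the wide coverage of remote sensing with the timeliness of social media data for large-area, real-time monitoring of ground events. This type of fusion has been studied in some depth in recent years.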

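Using a decision tree algorithm, one study collected trending Twitter content and matched it with remote sensing images of the corresponding areas to monitor floods and other disasters accurately and effectively [124]; follow-up work added terrain data [125] and wiki data [126] to these sources. Researchers then found that when a deep neural network classifies urban areas in remote sensing images, adding pixel-matched social media data as an auxiliary input yields higher classification accuracy [127], with similar studies following [128-130]. Deep learning has been used to jointly analyze remote sensing images and social media data and accurately delineate urban functional zones [131-132]. Analysis of nighttime light data together with social media data has revealed population dynamics within cities at the daily scale [133], and later work went further to infer urban socioeconomic conditions [134].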

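3 Information fusion for quantitative retrieval

Converting satellite observations into land surface parameters requires quantitative retrieval [135]. However, owing to factors such as the observation technique (optical, radar, LiDAR, etc.) and the observation environment (illumination, clouds, aerosols, etc.) [136], quantitative products retrieved from a single remote sensing data source often have considerable limitations. Exploiting the complementarity of data from different sources and fusing multi-source information to improve the quality of retrieval products is therefore of great significance.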

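3.1 Basic principle and framework of point-surface data fusion

Traditional monitoring of the Earth's environment relies on ground observation stations, such as meteorological, ocean, ecological, and atmospheric environment monitoring stations. Station data are point data: they are accurate, timely, and stable, but the stations are sparsely distributed, cover a limited area, and are costly to build. Satellite remote sensing, a newer observation technique, images the Earth and provides surface (areal) observations. Its large-area, wide-coverage, continuous observation complements station observation well [137]. Fusing point and surface data (point-surface fusion) is therefore an effective way to obtain accurate, large-scale, spatially continuous quantitative products [138].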

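The basic idea of point-surface fusion is to build a relational model that maps surface data to point data. Fusion models fall into two broad classes: physical and statistical. Physical models derive the mapping mainly from the physical relationships in the data, whereas statistical models mainly use machine learning and similar methods to establish statistical relationships between point and surface data [139]. Statistical models are simple, efficient, and accurate. Benefiting from advances in computing and artificial intelligence, machine-learning-based statistical models in particular have shown superior performance and have been developed and applied rapidly and widely [140].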

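3.2 Machine learning models for point-surface data fusion

The basic workflow of machine-learning-based point-surface fusion is shown in Fig. 6. It comprises three main steps: spatiotemporal matching of the multi-source point and surface data, construction and training of the machine learning model, and quantitative retrieval. Because station data are usually highly accurate, they typically serve as the label data in the fusion model. Many machine learning methods are used for point-surface fusion. Early work mostly used linear regression (e.g., multiple linear regression and geographically weighted regression (GWR)), decision tree models (e.g., CART), and support vector machines (SVM). In recent years, with growing computing power and advances in neural networks, ensemble learning (e.g., random forest (RF), light gradient boosting machine (LGBM), and deep forest (DF)), neural networks (e.g., back-propagation neural network (BPNN), generalized regression neural network (GRNN), and Bayesian belief network (BBN)), and deep learning (e.g., deep neural network (DNN), convolutional neural network (CNN), and long short-term memory (LSTM) network) have been applied more widely [141]. The model is trained with the point label data as output and the satellite observations and other auxiliary variables as input. Feeding the surface data into the trained model then yields the fused quantitative product, which combines the high accuracy of point data with the wide coverage of surface data.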

Fig. 6 Basic principle of point-surface data fusion and workflow of machine-learning-based point-surface fusion for quantitative retrieval
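The three-step workflow (matching, training, retrieval) can be sketched with synthetic data. For brevity the sketch swaps in ordinary multiple linear regression, one of the early methods named above, in place of a random forest or neural network. The grid, station locations, coefficients, and all function names are hypothetical, and the matching step is reduced to looking up the grid cell that contains each station.

```python
import numpy as np

def match_stations(grid_features, rows, cols):
    """Step 1: extract the feature vector of the grid cell containing each station."""
    return grid_features[:, rows, cols].T              # (N stations, F features)

def fit_linear(x, y):
    """Step 2: fit y ~ x @ w + b by least squares (station values are the labels)."""
    design = np.column_stack([x, np.ones(len(x))])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

def predict_map(grid_features, coef):
    """Step 3: apply the trained model to every grid cell to obtain the product."""
    f, h, w = grid_features.shape
    flat = grid_features.reshape(f, -1).T               # (H*W, F)
    design = np.column_stack([flat, np.ones(len(flat))])
    return (design @ coef).reshape(h, w)

rng = np.random.default_rng(0)
grid_features = rng.random((2, 10, 10))                 # e.g. two satellite-derived variables
truth = 1.5 * grid_features[0] - 0.5 * grid_features[1] + 0.2   # synthetic target field
rows = rng.integers(0, 10, size=20)                     # synthetic station locations
cols = rng.integers(0, 10, size=20)
station_values = truth[rows, cols]                      # noise-free synthetic labels

coef = fit_linear(match_stations(grid_features, rows, cols), station_values)
product = predict_map(grid_features, coef)
print(np.allclose(product, truth))
```

With real data the relationship is rarely linear and the labels are noisy, which is why nonlinear learners and independent station-based validation are used in the studies reviewed here.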

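Machine-learning-based point-surface fusion models are accurate and broadly applicable, and are widely used in many fields. The following subsections give typical examples from the hydrosphere, atmosphere, and biosphere to illustrate the application of point-surface fusion to quantitative retrieval.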

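3.3 Hydrosphere

Soil moisture plays a vital role in the global water cycle [142]. Accurate soil moisture retrieval provides an efficient decision reference for flood forecasting [143], drought monitoring [144], and related tasks. Machine learning can simplify the ill-posed retrieval problem even when understanding of the physical process is limited, which gives it a major advantage in soil moisture retrieval. The traditional ANN-based approach, which retrieves soil moisture from simulated brightness temperatures or backscattering coefficients, appeared first, and its accuracy, stability, and effectiveness have been verified [145], but it does not take the characteristics of station data into account. Noting that station measurements are highly accurate at small scales, later work trained and calibrated the ANN on station observations, which enlarged the training dataset [146].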

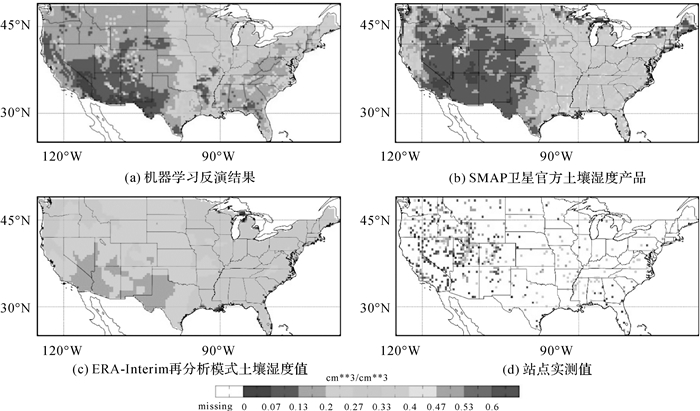

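However, station-based point-surface fusion is hampered by the spatial-scale mismatch and by the sparse distribution of the global soil moisture observation network. To address this, retrieval methods that add simulations from global land surface models (LSM) to the training data were proposed and developed [147]. Meanwhile, given the high spatiotemporal revisit and low cost of GNSS-R data, point-surface fusion algorithms that retrieve soil moisture from GNSS-R have been further expanded [148-151]. Reference [152] proposed a GRNN retrieval method based on screening for reliable stations, which effectively reduces the satellite-ground spatial-scale error and yields a seamless soil moisture product of higher fidelity. Ensemble learning methods such as XGBoost and RF also perform well in soil moisture fusion retrieval [153]. Fig. 7 shows an example of soil moisture retrieval with the GRNN-based point-surface fusion framework, which clearly reflects the advantage of machine learning for producing soil moisture products by point-surface fusion.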

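Terrestrial snow cover is one of the key factors influencing climate change [154]. The high reflectivity of the snow surface and the low thermal conductivity of snow exert some control over the surface radiation balance and energy exchange. The two main variables in snow research are snow depth and snow water equivalent. For retrieving both, machine learning models can fuse satellite and ground observations and achieve good results. Earlier studies showed that artificial neural networks perform well in point-surface-fusion retrieval of snow depth and snow water equivalent [156-158]. Later studies demonstrated that adding surface microwave channel data and fusing multi-source data such as latitude, longitude, and terrain further improves the retrieval accuracy of neural networks [159-161]. Reference [162] compared an empirical snow depth algorithm with three neural networks (BPNN, GRNN, and DBN) in point-surface fusion and found that the neural networks were significantly more accurate than the traditional algorithm. The network structure of the DBN snow depth retrieval is shown in Fig. 8, and Fig. 9 shows an interannual anomaly analysis of the retrieval results. Ensemble learning, another class of machine learning model, also performs well in point-surface-fusion retrieval of snow parameters. References [163-164] combined random forest with the HUT snow physical model to improve fusion retrieval accuracy for shallow and deep snow. To retrieve snow parameters over large areas, long time series, and at high spatial resolution, reference [165] used support vector regression to fuse ground station data, MODIS land cover data, and passive microwave data, producing nearly 25 years of daily snow depth data for the Northern Hemisphere. Other researchers have further added snow assimilation data to the input variables to obtain a more accurate Northern Hemisphere snow depth dataset [166].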

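Precipitation is also an important component of the water cycle, and the precipitation process is complex, nonlinear, and highly variable. Traditional rain gauges and ground-based radars provide reliable and accurate surface precipitation data [167-168]. However, they are unevenly distributed, especially over most ocean areas and mountainous regions with complex terrain [169-170]. Satellite observations are not restricted by geography and provide wide-area, temporally continuous coverage, which makes up for the shortcomings of ground observation [167-170]. Depending on the satellite data that are fused, point-surface-fusion precipitation retrieval methods fall into three broad classes: visible/infrared-based, active/passive microwave-based, and multi-sensor joint retrieval [167-173]. Infrared-based methods estimate precipitation by modeling the relationship between cloud-top temperature and surface rain rate [18]. Reference [18] fused infrared information from Himawari-8 with numerical weather prediction data to build a random-forest-based precipitation retrieval model. Compared with infrared, microwaves are more sensitive to precipitation particles and therefore tend to give higher accuracy [174-176]. Because a single sensor still has limitations, researchers have begun to combine multiple sensors to obtain high-quality global precipitation products such as IMERG [177], GSMaP [178-180], and CMORPH [181-182]. In addition, the RF-MEP model [183] merges existing products with ground station data and terrain factors to obtain more accurate precipitation estimates over local regions [184-192].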

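3.4 Atmosphere

The atmosphere consists mainly of a variety of gases, including trace gases and CO2. In recent years, the amount and variety of pollutants that humans emit into the atmosphere have increased year by year, seriously harming human health and ecosystems. Atmospheric motion produces phenomena such as hurricanes, wildfires, and dust storms, and these atmospheric hazards adversely affect human health and the economy. Because different gases have different spectral absorption features in remote sensing observations, and because atmospheric hazards can be identified in remote sensing images, these gas parameters and atmospheric phenomena can be retrieved from remote sensing data. Traditional machine learning and deep learning have been very successful in retrieving atmospheric parameters by fusing satellite and ground data, clearly outperforming traditional methods. This section focuses on progress in data-driven point-surface fusion for monitoring and mapping atmospheric pollutants.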

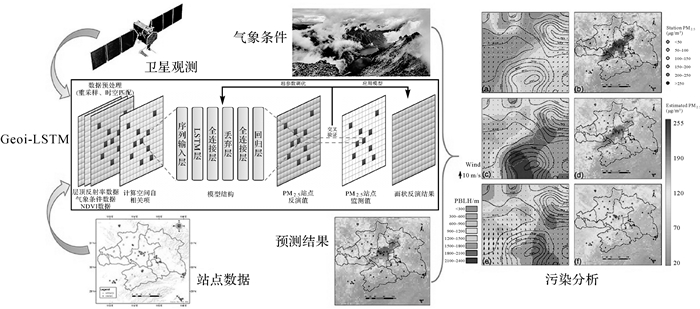

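Aerosols are usually characterized by aerosol optical depth (AOD). Data-driven aerosol retrieval fuses multi-band satellite radiance images with auxiliary variables such as viewing geometry, terrain, and meteorological conditions. Reference [193] built a neural network model for AOD retrieval using brightness temperatures and AERONET station values. Reference [194] made a similar attempt and proposed a deep neural network for fused AOD estimation, with accuracy better than physical models and traditional machine learning models. Because aerosols contain particulate matter (PM), satellite-retrieved AOD is a widely used parameter in satellite-based retrieval of near-surface PM. Reference [195] fused satellite, meteorological, and geographic data with ground measurements and used random forest, extreme gradient boosting, and a deep neural network to predict PM2.5; the three methods performed similarly. Reference [196] used a deep belief network to model the relationship between PM2.5 and AOD, meteorological conditions, and vegetation distribution, taking into account the strong temporal and spatial correlation of PM2.5 observations, and achieved accurate, high-resolution PM2.5 retrieval. Reference [17] used an LSTM model to fuse the spatiotemporal features of Himawari-8 top-of-atmosphere (TOA) reflectance, meteorological data, NDVI, and PM2.5 to retrieve PM2.5 at high temporal resolution, as shown in Fig. 10.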

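In data-driven monitoring of atmospheric trace gases, many studies use satellite-observed column or profile data to retrieve trace gas concentrations [197-199]. Reference [200] additionally introduced column products from TROPOMI on Sentinel-5P, combined them with GEOS-FP data, and used the light gradient boosting machine (LightGBM) to estimate high-resolution, full-coverage O3, CO, and NO2 over China. Reference [201] coupled TROPOMI column data with numerical model output and used RF to estimate surface SO2 concentrations. Satellite remote sensing can also effectively observe tropospheric ozone precursors, and these data can be used to estimate surface ozone. Reference [202] proposed a spatiotemporal embedded deep residual learning model (STE-ResNet) that integrates ground station measurements, satellite O3 precursor data, reanalysis data, and emission data to obtain daily high-resolution surface O3 concentrations.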

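Data-driven methods are also widely used for monitoring atmospheric hazards [203]. Time-series prediction models such as RNN and ConvLSTM can fuse station climate data with satellite surface observations to analyze hurricane behavior and predict hurricane tracks [204-205]. Deep learning can combine reanalysis data and satellite images to assess hurricane damage [206]. A growing number of machine learning methods are used to improve fire detection and prediction, classify and map wildfire severity, automatically detect wildfires in satellite images [207], and detect the sources, transport, and wind-erosion susceptibility of various kinds of dust [208-211].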

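Overall, point-surface fusion machine learning models have achieved some success in retrieving atmospheric parameters. They are increasingly used to estimate high-resolution products and to account better for spatiotemporal features and spatiotemporal heterogeneity. However, they have seldom been applied to the vertical structure of the atmosphere. Moreover, data-driven methods neglect the underlying mechanisms, weaken causal relationships, and are poorly interpretable, which is a bottleneck for this research direction.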

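3.5 Biosphere

Biomass, leaf area index, fractional vegetation cover, and chlorophyll content are important parameters for assessing the ecological balance of the biosphere [212]. Vegetation parameters derived from remote sensing data alone (such as the normalized difference vegetation index) provide limited information, and their accuracy leaves considerable room for improvement. Point-surface fusion for quantitative retrieval can provide richer and more accurate vegetation parameters. Many studies have combined multispectral and hyperspectral remote sensing data with field-measured vegetation data, using machine learning methods such as support vector regression, Gaussian process regression, kernel ridge regression, neural networks, random forest, Bayesian networks, and deep learning, to estimate various vegetation biochemical parameters accurately [213-215]. The results show that machine-learning-based point-surface fusion largely avoids the high cost and destructiveness of traditional field measurement. At the same time, thanks to the strong nonlinear fitting ability of machine learning, it achieves higher retrieval accuracy than retrieval from surface data alone.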

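Besides vegetation biochemical parameters, machine-learning-based point-surface fusion has also been used to monitor land surface phenology. For example, reference [216] used data from MODIS and the USA National Phenology Network with neural network and random forest models to retrieve phenology, and the machine learning methods outperformed traditional methods. In addition to optical data, radar-based point-surface fusion has also been used for phenology retrieval. Reference [217] used the random forest algorithm, Radarsat-2 data, and field measurements from the SMAPVEX16-MB campaign to retrieve the phenology of crops such as canola, corn, soybean, and wheat in Manitoba, Canada.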

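4 Problems and challenges in multi-source remote sensing data fusion

A deep understanding of the complex natural and human phenomena on the Earth's surface requires a comprehensive, complete, and sustained Earth observation system. Although the spatial, temporal, and spectral resolution of remote sensing systems keeps improving, it still cannot fully meet the need to understand complex geoscience processes and their underlying mechanisms in depth. New satellite sensors therefore continue to be developed, and further improving the theory and methods of integrated spatial-temporal-spectral fusion for the new kinds of observation data that keep emerging is an important direction for advancing this research. In addition, as the number of Earth observation satellites grows, so does the data volume, while geoscience applications such as disaster response and environmental monitoring demand timeliness. Making full use of available computing resources to achieve real-time transmission, processing, analysis, and application of massive data is an important way to increase the application potential of this research.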

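Overall, for improving the swath, spatial, spectral, and temporal dimensions of data, model-driven methods accommodate the diversity of image types and the complexity of imaging well, and can describe the temporal, spatial, and spectral degradation relationships among multiple datasets. However, they struggle to handle the nonlinear characteristics of swath-spatial, spatial-spectral, spatiotemporal, and heterogeneous relationships precisely, which limits fusion accuracy when the data do not overlap spatially, do not cover the same spectral range, or are spatiotemporally heterogeneous. Data-driven methods, especially deep learning, build complex multi-layer networks to capture a large number of feature relationships among data and have strong nonlinear processing capability. However, they depend too heavily on data quality and quantity, and because they do not incorporate the physical degradation process of imaging, their results are not robust enough; they are also insufficiently sensitive to features in sparse, low-resolution data. Some difficult problems thus remain to be studied in depth. Building a framework that integrates data degradation mechanisms with statistical information, and that accurately extracts effective features from multi-dimensional information, is therefore a necessary new route to higher fusion accuracy. For data-driven remote sensing information fusion models, the main future research directions include: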

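(1) Lightweight networks for higher fusion efficiency. The marked gains in fusion quality achieved by existing data-driven algorithms come at the cost of large numbers of model parameters and heavy computation, which greatly limits the efficiency of multi-source remote sensing data fusion. Designing lightweight networks that balance model complexity and fusion quality is therefore a major difficulty for the field.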

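(2) Combining data-driven and model-driven approaches. Data-driven methods are efficient and need no hand-crafted priors, whereas model-driven methods generalize well and are physically interpretable. Breaking down the barrier between the two and building multi-source fusion algorithms that combine them is also a top priority.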

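(3) Model generalization. A data-driven fusion algorithm can adapt to a change of sensor only if a complete training set is built for the new sensor and the model is retrained for the problem. This is because data-driven algorithms generalize poorly and cannot accommodate the degradation characteristics of different sensors at once. Improving the generalization of data-driven models and proposing a unified framework for multi-source remote sensing information fusion is an urgent task.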

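(4) Real-time on-orbit fusion. Current data-driven algorithms require powerful computing resources and hardware. However, as the space-air-ground remote sensing monitoring network matures, on-orbit optimization of satellite image data is becoming very important, and developing fusion algorithms that can run on onboard platforms with limited payload capacity is another important future direction.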

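5 Conclusions

Data-driven multi-source remote sensing information fusion mines data features and learns feature relationships from massive remote sensing data, and thereby couples the complementary information in multi-source observations effectively. This paper has reviewed its development in three areas: homogeneous remote sensing data fusion, heterogeneous remote sensing data fusion, and point-surface fusion. Methods have evolved from simple component substitution and multiresolution analysis to deep learning, graph convolution, and Transformers, and from single-date, single-modality data to multi-temporal, multi-modal data. Although data-driven fusion algorithms currently lead in fusion quality and quantitative metrics, they neglect physical meaning and depend strongly on the quality of the training data. Model-driven algorithms rely on manual parameter tuning and have limited capacity to express priors, but they generalize and transfer well. In the future, the two can be coupled by, for example, using model derivations to guide network design or using network priors to improve the prior representation of models. This raises new questions: which models are most effective to combine, and which route to combining them is most convenient? Beyond that, considering improvements to data-driven architectures alone, making models lightweight and improving their transferability and generalization are also important directions for future development.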

[1] 杨景辉. 遥感影像像素级融合通用模型及其并行计算方法[J]. 测绘学报, 2015, 44(8): 943. YANG Jinghui. The generalized model and parallel computing methods for pixel-level remote sensing image fusion[J]. Acta Geodaetica et Cartographica Sinica, 2015, 44(8): 943. DOI:10.11947/j.AGCS.2015.20150059

[2] 童庆禧, 张兵, 张立福. 中国高光谱遥感的前沿进展[J]. 遥感学报, 2016, 20(5): 689-707. TONG Qingxi, ZHANG Bing, ZHANG Lifu. Current progress of hyperspectral remote sensing in China[J]. Journal of Remote Sensing, 2016, 20(5): 689-707.

[3] 孙锐, 荣媛, 苏红波, 等. MODIS和HJ-1CCD数据时空融合重构NDVI时间序列[J]. 遥感学报, 2016, 20(3): 361-373. SUN Rui, RONG Yuan, SU Hongbo, et al. NDVI time-series reconstruction based on MODIS and HJ-1 CCD data spatial-temporal fusion[J]. Journal of Remote Sensing, 2016, 20(3): 361-373.

[4] LERTRATTANAPANICH S, BOSE N K. High resolution image formation from low resolution frames using Delaunay triangulation[J]. IEEE Transactions on Image Processing, 2002, 11(12): 1427-1441. DOI:10.1109/TIP.2002.806234

[5] IRANI M, PELEG S. Improving resolution by image registration[J]. CVGIP: Graphical Models and Image Processing, 1991, 53(3): 231-239. DOI:10.1016/1049-9652(91)90045-L

[6] STARK H, OSKOUI P. High-resolution image recovery from image-plane arrays, using convex projections[J]. Journal of the Optical Society of America A, 1989, 6(11): 1715-1726. DOI:10.1364/JOSAA.6.001715

[7] FARSIU S, ROBINSON M D, ELAD M, et al. Fast and robust multiframe super resolution[J]. IEEE Transactions on Image Processing, 2004, 13(10): 1327-1344. DOI:10.1109/TIP.2004.834669

[8] YANG Jianchao, WRIGHT J, HUANG T S, et al. Image super-resolution via sparse representation[J]. IEEE Transactions on Image Processing, 2010, 19(11): 2861-2873. DOI:10.1109/TIP.2010.2050625

[9] SCHOWENGERDT R A. Reconstruction of multispatial, multispectral image data using spatial frequency content[J]. Photogrammetric Engineering and Remote Sensing, 1980, 46(10): 1325-1334.

[10] HALLADA W A, COX S. Image sharpening for mixed spatial and spectral resolution satellite systems[C]//Proceedings of the 17th International Symposium on Remote Sensing of Environment. Ann Arbor: IOP, 1983.

[11] CLICHE G, BONN F, TEILLET P. Integration of the SPOT panchromatic channel into its multispectral mode for image sharpness enhancement[J]. Photogrammetric Engineering & Remote Sensing, 1985, 51(3): 311-316.

[12] SHEN Huanfeng, WU Penghai, LIU Yaolin, et al. A spatial and temporal reflectance fusion model considering sensor observation differences[J]. International Journal of Remote Sensing, 2013, 34(12): 4367-4383. DOI:10.1080/01431161.2013.777488

[13] WU Mingquan, NIU Zheng, WANG Changyao, et al. Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion model[J]. Journal of Applied Remote Sensing, 2012, 6(1): 063507. DOI:10.1117/1.JRS.6.063507

[14] LI Dacheng, LI Yanrong, YANG Wenfu, et al. An enhanced single-pair learning-based reflectance fusion algorithm with spatiotemporally extended training samples[J]. Remote Sensing, 2018, 10(8): 1207. DOI:10.3390/rs10081207

[15] XUE Jie, LEUNG Y, FUNG T. A Bayesian data fusion approach to spatio-temporal fusion of remotely sensed images[J]. Remote Sensing, 2017, 9(12): 1310. DOI:10.3390/rs9121310

[16] YANG J W, JIANG L M, LEMMETYINEN J, et al. Improving snow depth estimation by coupling HUT-optimized effective snow grain size parameters with the random forest approach[J]. Remote Sensing of Environment, 2021, 264: 112630. DOI:10.1016/j.rse.2021.112630

[17] WANG Bin, YUAN Qiangqiang, YANG Qianqian, et al. Estimate hourly PM2.5 concentrations from Himawari-8 TOA reflectance directly using geo-intelligent long short-term memory network[J]. Environmental Pollution, 2021, 271: 116327. DOI:10.1016/j.envpol.2020.116327

[18] MIN Min, BAI Chen, GUO Jianping, et al. Estimating summertime precipitation from Himawari-8 and global forecast system based on machine learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(5): 2557-2570. DOI:10.1109/TGRS.2018.2874950

[19] 黄波, 赵涌泉. 多源卫星遥感影像时空融合研究的现状及展望[J]. 测绘学报, 2017, 46(10): 1492-1499. HUANG Bo, ZHAO Yongquan. Research status and prospect of spatiotemporal fusion of multi-source satellite remote sensing imagery[J]. Acta Geodaetica et Cartographica Sinica, 2017, 46(10): 1492-1499. DOI:10.11947/j.AGCS.2017.20170376

[20] 张良培, 沈焕锋. 遥感数据融合的进展与前瞻[J]. 遥感学报, 2016, 20(5): 1050-1061. ZHANG Liangpei, SHEN Huanfeng. Progress and future of remote sensing data fusion[J]. Journal of Remote Sensing, 2016, 20(5): 1050-1061.

[21] TSAI R. Multiframe image restoration and registration[J]. Advances in Computer Vision and Image Processing, 1984, 1: 317-339.

[22] TIAN Yapeng, ZHANG Yulun, FU Yun, et al. TDAN: temporally-deformable alignment network for video super-resolution[C]//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle, WA, USA: IEEE, 2020: 3360-3369.

[23] SALVETTI F, MAZZIA V, KHALIQ A, et al. Multi-image super resolution of remotely sensed images using residual attention deep neural networks[J]. Remote Sensing, 2020, 12(14): 2207. DOI:10.3390/rs12142207

[24] SONG Huihui, XU Wenjie, LIU Dong, et al. Multi-stage feature fusion network for video super-resolution[J]. IEEE Transactions on Image Processing, 2021, 30: 2923-2934. DOI:10.1109/TIP.2021.3056868

[25] SHI Wenzhe, CABALLERO J, HUSZÁR F, et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, USA: IEEE, 2016: 1874-1883.

[26] TAKEDA H, FARSIU S, MILANFAR P. Kernel regression for image processing and reconstruction[J]. IEEE Transactions on Image Processing, 2007, 16(2): 349-366. DOI:10.1109/TIP.2006.888330

[27] XIAO Yi, SU Xin, YUAN Qiangqiang, et al. Satellite video super-resolution via multiscale deformable convolution alignment and temporal grouping projection[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 1-19.

[28] YI Peng, WANG Zhongyuan, JIANG Kui, et al. Progressive fusion video super-resolution network via exploiting non-local spatio-temporal correlations[C]//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision. Seoul, Korea: IEEE, 2019: 3106-3115.

[29] WANG Zhijun, ZIOU D, ARMENAKIS C, et al. A comparative analysis of image fusion methods[J]. IEEE Transactions on Geoscience and Remote Sensing, 2005, 43(6): 1391-1402. DOI:10.1109/TGRS.2005.846874

[30] ALPARONE L, AIAZZI B, BARONTI S, et al. Remote sensing image fusion[M]. Boca Raton, FL: CRC Press, 2015.

[31] THOMAS C, RANCHIN T, WALD L, et al. Synthesis of multispectral images to high spatial resolution: a critical review of fusion methods based on remote sensing physics[J]. IEEE Transactions on Geoscience and Remote Sensing, 2008, 46(5): 1301-1312. DOI:10.1109/TGRS.2007.912448

[32] AIAZZI B, ALPARONE L, BARONTI S, et al. Twenty-five years of pansharpening: a critical review and new developments[M]//CHEN C H. Signal and Image Processing for Remote Sensing. 2nd ed. Boca Raton, FL: CRC Press, 2012: 533-548.

[33] VIVONE G, ALPARONE L, CHANUSSOT J, et al. A critical comparison among pansharpening algorithms[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(5): 2565-2586. DOI:10.1109/TGRS.2014.2361734

[34] LONCAN L, DE ALMEIDA L B, BIOUCAS-DIAS J M, et al. Hyperspectral pansharpening: a review[J]. IEEE Geoscience and Remote Sensing Magazine, 2015, 3(3): 27-46. DOI:10.1109/MGRS.2015.2440094

[35] HASANLOU M, SARADJIAN M R. Quality assessment of pan-sharpening methods in high-resolution satellite images using radiometric and geometric index[J]. Arabian Journal of Geosciences, 2016, 9(1): 45. DOI:10.1007/s12517-015-2015-0

[36] JIANG Dong, ZHUANG Dafang, HUANG Yaohuan, et al. Survey of multispectral image fusion techniques in remote sensing applications[M]//ZHENG Yufeng. Image Fusion and Its Applications. [S. l. ]: IntechOpen, 2011: 1-23.

[37] TU Teming, CHENG Wenchun, CHANG C P, et al. Best tradeoff for high-resolution image fusion to preserve spatial details and minimize color distortion[J]. IEEE Geoscience and Remote Sensing Letters, 2007, 4(2): 302-306. DOI:10.1109/LGRS.2007.894143

[38] KLONUS S, EHLERS M. Performance of evaluation methods in image fusion[C]//Proceedings of the 12th International Conference on Information Fusion. Seattle, WA, USA: IEEE, 2009: 1409-1416.

[39] GARZELLI A, NENCINI F, CAPOBIANCO L. Optimal MMSE pan sharpening of very high resolution multispectral images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2008, 46(1): 228-236. DOI:10.1109/TGRS.2007.907604

[40] CHOI J, YU K, KIM Y. A new adaptive component-substitution-based satellite image fusion by using partial replacement[J]. IEEE Transactions on Geoscience and Remote Sensing, 2011, 49(1): 295-309. DOI:10.1109/TGRS.2010.2051674

[41] OTAZU X, GONZÁLEZ-AUDÍCANA M, FORS O, et al. Introduction of sensor spectral response into image fusion methods application to wavelet-based methods[J]. IEEE Transactions on Geoscience and Remote Sensing, 2005, 43(10): 2376-2385. DOI:10.1109/TGRS.2005.856106

[42] AMOLINS K, ZHANG Yun, DARE P. Wavelet based image fusion techniques: an introduction, review and comparison[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2007, 62(4): 249-263. DOI:10.1016/j.isprsjprs.2007.05.009

[43] AIAZZI B, ALPARONE L, BARONTI S, et al. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery[J]. Photogrammetric Engineering & Remote Sensing, 2006, 72(5): 591-596.

[44] VIVONE G, RESTAINO R, CHANUSSOT J. Full scale regression-based injection coefficients for panchromatic sharpening[J]. IEEE Transactions on Image Processing, 2018, 27(7): 3418-3431. DOI:10.1109/TIP.2018.2819501

[45] BALLESTER C, CASELLES V, IGUAL L, et al. A variational model for P+XS image fusion[J]. International Journal of Computer Vision, 2006, 69(1): 43-58. DOI:10.1007/s11263-006-6852-x

[46] FU Xueyang, LIN Zihuang, HUANG Yue, et al. A variational pan-sharpening with local gradient constraints[C]//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, CA, USA: IEEE, 2019: 10265-10274.

[47] ZHANG Liangpei, SHEN Huanfeng, GONG Wei, et al. Adjustable model-based fusion method for multispectral and panchromatic images[J]. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 2012, 42(6): 1693-1704. DOI:10.1109/TSMCB.2012.2198810

[48] 孟祥超, 沈焕锋, 张洪艳, 等. 基于梯度一致性约束的多光谱/全色影像最大后验融合方法[J]. 光谱学与光谱分析, 2014, 34(5): 1332-1337. MENG Xiangchao, SHEN Huanfeng, ZHANG Hongyan, et al. Maximum a posteriori fusion method based on gradient consistency constraint for multispectral/panchromatic remote sensing images[J]. Spectroscopy and Spectral Analysis, 2014, 34(5): 1332-1337. DOI:10.3964/j.issn.1000-0593(2014)05-1332-06

[49] JIANG Cheng, ZHANG Hongyan, SHEN Huanfeng, et al. A practical compressed sensing-based pan-sharpening method[J]. IEEE Geoscience and Remote Sensing Letters, 2012, 9(4): 629-633. DOI:10.1109/LGRS.2011.2177063

[50] LI Shutao, YANG Bin. A new pan-sharpening method using a compressed sensing technique[J]. IEEE Transactions on Geoscience and Remote Sensing, 2011, 49(2): 738-746. DOI:10.1109/TGRS.2010.2067219

[51] KIM Y, LEE C, HAN D, et al. Improved additive-wavelet image fusion[J]. IEEE Geoscience and Remote Sensing Letters, 2011, 8(2): 263-267. DOI:10.1109/LGRS.2010.2067192

[52] VIVONE G, ALPARONE L, GARZELLI A, et al. Fast reproducible pansharpening based on instrument and acquisition modeling: AWLP revisited[J]. Remote Sensing, 2019, 11(19): 2315. DOI:10.3390/rs11192315

[53] JAVAN F D, SAMADZADEGAN F, MEHRAVAR S, et al. A review of image fusion techniques for pan-sharpening of high-resolution satellite imagery[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 171: 101-117. DOI:10.1016/j.isprsjprs.2020.11.001

[54] CHENG Ming, WANG Cheng, LI J. Sparse representation based pansharpening using trained dictionary[J]. IEEE Geoscience and Remote Sensing Letters, 2014, 11(1): 293-297. DOI:10.1109/LGRS.2013.2256875

[55] ZHU Xiaoxiang, GROHNFELDT C, BAMLER R. Exploiting joint sparsity for pansharpening: the J-SparseFI algorithm[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(5): 2664-2681. DOI:10.1109/TGRS.2015.2504261

[56] HUANG Wei, XIAO Liang, WEI Zhihui, et al. A new pan-sharpening method with deep neural networks[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(5): 1037-1041. DOI:10.1109/LGRS.2014.2376034

[57] MASI G, COZZOLINO D, VERDOLIVA L, et al. Pansharpening by convolutional neural networks[J]. Remote Sensing, 2016, 8(7): 594. DOI:10.3390/rs8070594

[58] RAO Yizhou, HE Lin, ZHU Jiawei. A residual convolutional neural network for pan-shaprening[C]//Proceedings of 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP). Shanghai, China: IEEE, 2017: 1-4.

[59] WEI Yancong, YUAN Qiangqiang, SHEN Huanfeng, et al. Boosting the accuracy of multispectral image pansharpening by learning a deep residual network[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(10): 1795-1799. DOI:10.1109/LGRS.2017.2736020

[60] YANG Junfeng, FU Xueyang, HU Yuwen, et al. PanNet: a deep network architecture for pan-sharpening[C]//Proceedings of 2017 IEEE International Conference on Computer Vision. Venice, Italy: IEEE, 2017: 5449-5457.

| [61] |

LIU Xiangyu, WANG Yunhong, LIU Qingjie. Psgan: a generative adversarial network for remote sensing image pan-sharpening[C]//Proceedings of the 25th IEEE International Conference on Image Processing (ICIP). Athens, GREECE: IEEE, 2018: 873-877.

|

| [62] |

SHEN Huanfeng, JIANG Menghui, LI Jie, et al. Spatial-spectral fusion by combining deep learning and variational model[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(8): 6169-6181. DOI:10.1109/TGRS.2019.2904659 |

| [63] |

JIANG Menghui, SHEN Huanfeng, LI Jie, et al. A differential information residual convolutional neural network for pansharpening[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 163: 257-271. DOI:10.1016/j.isprsjprs.2020.03.006 |

| [64] |

XING Yinghui, YANG Shuyuan, FENG Zhixi, et al. Dual-collaborative fusion model for multispectral and panchromatic image fusion[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 60: 5400215. |

| [65] |

ZHOU Man, FU Xueyang, HUANG Jie, et al. Effective pan-sharpening with transformer and invertible neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 5406815. |

| [66] |

CETIN M, MUSAOGLU N. Merging hyperspectral and panchromatic image data: qualitative and quantitative analysis[J]. International Journal of Remote Sensing, 2009, 30(7): 1779-1804. DOI:10.1080/01431160802639525 |

| [67] |

DIAN Renwei, LI Shutao, GUO Anjing, et al. Deep hyperspectral image sharpening[J]. IEEE Transactions on Neural Networks and Learning Systems, 2018, 29(11): 5345-5355. DOI:10.1109/TNNLS.2018.2798162 |

| [68] |

LICCIARDI G A, KHAN M M, CHANUSSOT J, et al. Fusion of hyperspectral and panchromatic images using multiresolution analysis and nonlinear PCA band reduction[J]. EURASIP Journal on Advances in Signal Processing, 2012, 2012(1): 207. DOI:10.1186/1687-6180-2012-207 |

| [69] |

GOMEZ R B, JAZAERI A, KAFATOS M. Wavelet-based hyperspectral and multispectral image fusion[C]//Proceedings of 2001 SPIE 4383, Geo-Spatial Image and Data Exploitation Ⅱ. Orlando, FL, USA: SPIE, 2001: 36-42.

|

| [70] |

CHEN Zhao, PU Hanye, WANG Bin, et al. Fusion of hyperspectral and multispectral images: a novel framework based on generalization of pan-sharpening methods[J]. IEEE Geoscience and Remote Sensing Letters, 2014, 11(8): 1418-1422. DOI:10.1109/LGRS.2013.2294476 |

| [71] |

WEI Qi, BIOUCAS-DIAS J, DOBIGEON N, et al. Hyperspectral and multispectral image fusion based on a sparse representation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(7): 3658-3668. DOI:10.1109/TGRS.2014.2381272 |

| [72] |

YOKOYA N, GROHNFELDT C, CHANUSSOT J. Hyperspectral and multispectral data fusion: a comparative review of the recent literature[J]. IEEE Geoscience and Remote Sensing Magazine, 2017, 5(2): 29-56. DOI:10.1109/MGRS.2016.2637824 |

| [73] |

PALSSON F, SVEINSSON J R, ULFARSSON M O, et al. Model-based fusion of multi-and hyperspectral images using PCA and wavelets[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(5): 2652-2663. DOI:10.1109/TGRS.2014.2363477 |

| [74] |

HU Jinfan, HUANG Tingzhu, DENG Liangjian, et al. Hyperspectral image super-resolution via deep spatiospectral attention convolutional neural networks[J]. IEEE Transactions on Neural Networks and Learning Systems, 2021(99): 1-15. |

| [75] |

XIE Qi, ZHOU Minghao, ZHAO Qian, et al. MHF-Net: an interpretable deep network for multispectral and hyperspectral image fusion[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(3): 1457-1473. DOI:10.1109/TPAMI.2020.3015691 |

| [76] |

MEI Shaohui, YUAN Xin, JI Jingyu, et al. Hyperspectral image spatial super-resolution via 3D full convolutional neural network[J]. Remote Sensing, 2017, 9(11): 1139. DOI:10.3390/rs9111139 |

| [77] |

LI Kaiyan, XIE Weiying, DU Qian, et al. DDLPS: detail-based deep laplacian pansharpening for hyperspectral imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(10): 8011-8025. DOI:10.1109/TGRS.2019.2917759 |

| [78] |

WU Penghai, SHEN Huanfeng, ZHANG Liangpei, et al. Integrated fusion of multi-scale polar-orbiting and geostationary satellite observations for the mapping of high spatial and temporal resolution land surface temperature[J]. Remote Sensing of Environment, 2015, 156: 169-181. DOI:10.1016/j.rse.2014.09.013 |

| [79] |

WU Mingquan, HUANG Wenjiang, NIU Zheng, et al. Generating daily synthetic Landsat imagery by combining Landsat and MODIS data[J]. Sensors, 2015, 15(9): 24002-24025. DOI:10.3390/s150924002 |

| [80] |

LIU Xun, DENG Chenwei, CHANUSSOT J, et al. StfNet: a two-stream convolutional neural network for spatiotemporal image fusion[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(9): 6552-6564. DOI:10.1109/TGRS.2019.2907310 |

| [81] |

SHEN Huanfeng, MENG Xiangchao, ZHANG Liangpei. An integrated framework for the spatio-temporal-spectral fusion of remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(12): 7135-7148. DOI:10.1109/TGRS.2016.2596290 |

| [82] |

GAO Feng, MASEK J, SCHWALLER M, et al. On the blending of the Landsat and MODIS surface reflectance: predicting daily Landsat surface reflectance[J]. IEEE Transactions on Geoscience and Remote Sensing, 2006, 44(8): 2207-2218. DOI:10.1109/TGRS.2006.872081 |

| [83] |

HILKER T, WULDER M A, COOPS N C, et al. A new data fusion model for high spatial- and temporal-resolution mapping of forest disturbance based on Landsat and MODIS[J]. Remote Sensing of Environment, 2009, 113(8): 1613-1627. DOI:10.1016/j.rse.2009.03.007 |

| [84] |

ZHU Xiaolin, CHEN Jin, GAO Feng, et al. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions[J]. Remote Sensing of Environment, 2010, 114(11): 2610-2623. DOI:10.1016/j.rse.2010.05.032 |

| [85] |

WANG Qunming, ZHANG Yihang, ONOJEGHUO A O, et al. Enhancing spatio-temporal fusion of MODIS and Landsat data by incorporating 250 m MODIS data[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2017, 10(9): 4116-4123. DOI:10.1109/JSTARS.2017.2701643 |

| [86] |

HUANG Bo, WANG Juan, SONG Huihui, et al. Generating high spatiotemporal resolution land surface temperature for urban heat island monitoring[J]. IEEE Geoscience and Remote Sensing Letters, 2013, 10(5): 1011-1015. DOI:10.1109/LGRS.2012.2227930 |

| [87] |

XIA Haiping, CHEN Yunhao, LI Ying, et al. Combining kernel-driven and fusion-based methods to generate daily high-spatial-resolution land surface temperatures[J]. Remote Sensing of Environment, 2019, 224: 259-274. DOI:10.1016/j.rse.2019.02.006 |

| [88] |

CHENG Qing, LIU Huiqing, SHEN Huanfeng, et al. A spatial and temporal nonlocal filter-based data fusion method[J]. IEEE Transactions on Geoscience and Remote Sensing, 2017, 55(8): 4476-4488. DOI:10.1109/TGRS.2017.2692802 |

| [89] |

HAZAYMEH K, HASSAN Q K. Spatiotemporal image-fusion model for enhancing the temporal resolution of Landsat-8 surface reflectance images using MODIS images[J]. Journal of Applied Remote Sensing, 2015, 9(1): 096095. DOI:10.1117/1.JRS.9.096095 |

| [90] |

ZHUKOV B, OERTEL D, LANZL F, et al. Unmixing-based multisensor multiresolution image fusion[J]. IEEE Transactions on Geoscience and Remote Sensing, 1999, 37(3): 1212-1226. DOI:10.1109/36.763276 |

| [91] |

ZURITA-MILLA R, CLEVERS J G P W, SCHAEPMAN M E. Unmixing-based Landsat TM and MERIS FR data fusion[J]. IEEE Geoscience and Remote Sensing Letters, 2008, 5(3): 453-457. DOI:10.1109/LGRS.2008.919685 |

| [92] |

MASELLI F, REMBOLD F. Integration of LAC and GAC NDVI data to improve vegetation monitoring in semi-arid environments[J]. International Journal of Remote Sensing, 2002, 23(12): 2475-2488. DOI:10.1080/01431160110104755 |

| [93] |

ZHANG Wei, LI Ainong, JIN Huaan, et al. An enhanced spatial and temporal data fusion model for fusing Landsat and MODIS surface reflectance to generate high temporal Landsat-like data[J]. Remote Sensing, 2013, 5(10): 5346-5368. DOI:10.3390/rs5105346 |

| [94] |

HUANG Bo, ZHANG Hankui. Spatio-temporal reflectance fusion via unmixing: accounting for both phenological and land-cover changes[J]. International Journal of Remote Sensing, 2014, 35(16): 6213-6233. DOI:10.1080/01431161.2014.951097 |

| [95] |

XU Yong, HUANG Bo, XU Yuyue, et al. Spatial and temporal image fusion via regularized spatial unmixing[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(6): 1362-1366. DOI:10.1109/LGRS.2015.2402644 |

| [96] |

MIZUOCHI H, HIYAMA T, OHTA T, et al. Development and evaluation of a lookup-table-based approach to data fusion for seasonal wetlands monitoring: an integrated use of AMSR series, MODIS, and Landsat[J]. Remote Sensing of Environment, 2017, 199: 370-388. DOI:10.1016/j.rse.2017.07.026 |

| [97] |

HUANG Bo, SONG Huihui. Spatiotemporal reflectance fusion via sparse representation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2012, 50(10): 3707-3716. DOI:10.1109/TGRS.2012.2186638 |

| [98] |

SONG Huihui, HUANG Bo. Spatiotemporal satellite image fusion through one-pair image learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2013, 51(4): 1883-1896. DOI:10.1109/TGRS.2012.2213095 |

| [99] |

ZHAO Chongyue, GAO Xinbo, EMERY W J, et al. An integrated spatio-spectral-temporal sparse representation method for fusing remote-sensing images with different resolutions[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(6): 3358-3370. DOI:10.1109/TGRS.2018.2798663 |

| [100] |

LIU Xun, DENG Chenwei, WANG Shuigen, et al. Fast and accurate spatiotemporal fusion based upon extreme learning machine[J]. IEEE Geoscience and Remote Sensing Letters, 2016, 13(12): 2039-2043. DOI:10.1109/LGRS.2016.2622726 |

| [101] |

TAN Zhenyu, YUE Peng, DI Liping, et al. Deriving high spatiotemporal remote sensing images using deep convolutional network[J]. Remote Sensing, 2018, 10(7): 1066. DOI:10.3390/rs10071066 |

| [102] |

LI Aihua, BO Yanchen, ZHU Yuxin, et al. Blending multi-resolution satellite sea surface temperature (SST) products using Bayesian maximum entropy method[J]. Remote Sensing of Environment, 2013, 135: 52-63. DOI:10.1016/j.rse.2013.03.021 |

| [103] |

HUANG Bo, ZHANG Hankui, SONG Huihui, et al. Unified fusion of remote-sensing imagery: generating simultaneously high-resolution synthetic spatial-temporal-spectral earth observations[J]. Remote Sensing Letters, 2013, 4(6): 561-569. DOI:10.1080/2150704X.2013.769283 |

| [104] |

ECKARDT R, BERGER C, THIEL C, et al. Removal of optically thick clouds from multi-spectral satellite images using multi-frequency SAR data[J]. Remote Sensing, 2013, 5(6): 2973-3006. DOI:10.3390/rs5062973 |

| [105] |

HUANG Bo, LI Ying, HAN Xiaoyu, et al. Cloud removal from optical satellite imagery with SAR imagery using sparse representation[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(5): 1046-1050. DOI:10.1109/LGRS.2014.2377476 |

| [106] |

BERMUDEZ J D, HAPP P N, OLIVEIRA D A B, et al. SAR to optical image synthesis for cloud removal with generative adversarial networks[C]//Proceedings of 2018 ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Karlsruhe, Germany: ISPRS, 2018. |

| [107] |

GAO Jianhao, YUAN Qiangqiang, LI Jie, et al. Cloud removal with fusion of high resolution optical and SAR images using generative adversarial networks[J]. Remote Sensing, 2020, 12(1): 191. DOI:10.3390/rs12010191 |

| [108] |

TURNES J N, CASTRO J D B, TORRES D L, et al. Atrous cGAN for SAR to optical image translation[J]. IEEE Geoscience and Remote Sensing Letters, 2020, 19: 4003905. |

| [109] |

HE Wei, YOKOYA N. Multi-temporal Sentinel-1 and -2 data fusion for optical image simulation[J]. ISPRS International Journal of Geo-Information, 2018, 7(10): 389. DOI:10.3390/ijgi7100389 |

| [110] |

BERMUDEZ J D, HAPP P N, FEITOSA R Q, et al. Synthesis of multispectral optical images from SAR/optical multitemporal data using conditional generative adversarial networks[J]. IEEE Geoscience and Remote Sensing Letters, 2019, 16(8): 1220-1224. DOI:10.1109/LGRS.2019.2894734 |

| [111] |

XIA Yu, ZHANG Hongyan, ZHANG Liangpei, et al. Cloud removal of optical remote sensing imagery with multitemporal SAR-optical data using X-mtgan[C]//Proceedings of 2019 IEEE International Geoscience and Remote Sensing Symposium. Yokohama, Japan: IEEE, 2019: 3396-3399. |

| [112] |

SCHMITT M, HUGHES L H, ZHU X X. The SEN1-2 dataset for deep learning in SAR-optical data fusion[C]//Proceedings of 2018 ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Karlsruhe, Germany: ISPRS, 2018. |

| [113] |

LI Wenbo, LI Ying, CHAN J C W. Thick cloud removal with optical and SAR imagery via convolutional-mapping-deconvolutional network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 58(4): 2865-2879. DOI:10.1109/TGRS.2019.2956959 |

| [114] |

MERANER A, EBEL P, ZHU Xiaoxiang, et al. Cloud removal in Sentinel-2 imagery using a deep residual neural network and SAR-optical data fusion[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 166: 333-346. DOI:10.1016/j.isprsjprs.2020.05.013 |

| [115] |

LAURIN G V, LIESENBERG V, CHEN Qi, et al. Optical and SAR sensor synergies for forest and land cover mapping in a tropical site in West Africa[J]. International Journal of Applied Earth Observation and Geoinformation, 2013, 21: 7-16. DOI:10.1016/j.jag.2012.08.002 |

| [116] |

HONG Gang, ZHANG Aining, ZHOU Fuqun, et al. Integration of optical and synthetic aperture radar (SAR) images to differentiate grassland and alfalfa in Prairie area[J]. International Journal of Applied Earth Observation and Geoinformation, 2014, 28: 12-19. DOI:10.1016/j.jag.2013.10.003 |

| [117] |

INGLADA J, VINCENT A, ARIAS M, et al. Improved early crop type identification by joint use of high temporal resolution SAR and optical image time series[J]. Remote Sensing, 2016, 8(5): 362. DOI:10.3390/rs8050362 |

| [118] |

SUKAWATTANAVIJIT C, CHEN Jie, ZHANG Hongsheng. GA-SVM algorithm for improving land-cover classification using SAR and optical remote sensing data[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(3): 284-288. DOI:10.1109/LGRS.2016.2628406 |

| [119] |

IRWIN K, BEAULNE D, BRAUN A, et al. Fusion of SAR, optical imagery and airborne LiDAR for surface water detection[J]. Remote Sensing, 2017, 9(9): 890. DOI:10.3390/rs9090890 |

| [120] |

SHAO Zhenfeng, ZHANG Linjing, WANG Lei. Stacked sparse autoencoder modeling using the synergy of airborne LiDAR and satellite optical and SAR data to map forest above-ground biomass[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2017, 10(12): 5569-5582. DOI:10.1109/JSTARS.2017.2748341 |

| [121] |

LIU Shengjie, QI Zhixin, LI Xia, et al. Integration of convolutional neural networks and object-based post-classification refinement for land use and land cover mapping with optical and SAR data[J]. Remote Sensing, 2019, 11(6): 690. DOI:10.3390/rs11060690 |

| [122] |

ZHANG Puzhao, BAN Yifang, NASCETTI A. Learning U-Net without forgetting for near real-time wildfire monitoring by the fusion of SAR and optical time series[J]. Remote Sensing of Environment, 2021, 261: 112467. DOI:10.1016/j.rse.2021.112467 |

| [123] |

JIANG Xiao, LI Gang, LIU Yu, et al. Change detection in heterogeneous optical and SAR remote sensing images via deep homogeneous feature fusion[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 13: 1551-1566. DOI:10.1109/JSTARS.2020.2983993 |

| [124] |

CERVONE G, SAVA E, HUANG Qunying, et al. Using Twitter for tasking remote-sensing data collection and damage assessment: 2013 Boulder flood case study[J]. International Journal of Remote Sensing, 2016, 37(1): 100-124. DOI:10.1080/01431161.2015.1117684 |

| [125] |

ROSSER J F, LEIBOVICI D G, JACKSON M J. Rapid flood inundation mapping using social media, remote sensing and topographic data[J]. Natural Hazards, 2017, 87(1): 103-120. DOI:10.1007/s11069-017-2755-0 |

| [126] |

HUANG Qunying, CERVONE G, ZHANG Guiming. A cloud-enabled automatic disaster analysis system of multi-sourced data streams: an example synthesizing social media, remote sensing and Wikipedia data[J]. Computers, Environment and Urban Systems, 2017, 66: 23-37. DOI:10.1016/j.compenvurbsys.2017.06.004 |

| [127] |

CHI Mingmin, SUN Zhongyi, QIN Yiqing, et al. A novel methodology to label urban remote sensing images based on location-based social media photos[J]. Proceedings of the IEEE, 2017, 105(10): 1926-1936. DOI:10.1109/JPROC.2017.2730585 |

| [128] |

LIU Xiaoping, HE Jialv, YAO Yao, et al. Classifying urban land use by integrating remote sensing and social media data[J]. International Journal of Geographical Information Science, 2017, 31(8): 1675-1696. DOI:10.1080/13658816.2017.1324976 |

| [129] |

QIN Yiqing, CHI Mingmin, LIU Xuan, et al. Classification of high resolution urban remote sensing images using deep networks by integration of social media photos[C]//Proceedings of 2018 IEEE International Geoscience and Remote Sensing Symposium. Valencia, Spain: IEEE, 2018: 7243-7246. |

| [130] |

SHI Yan, QI Zhixin, LIU Xiaoping, et al. Urban land use and land cover classification using multisource remote sensing images and social media data[J]. Remote Sensing, 2019, 11(22): 2719. DOI:10.3390/rs11222719 |

| [131] |

CAO Rui, TU Wei, YANG Cuixin, et al. Deep learning-based remote and social sensing data fusion for urban region function recognition[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 163: 82-97. DOI:10.1016/j.isprsjprs.2020.02.014 |

| [132] |

TU Wei, ZHANG Yatao, LI Qingquan, et al. Scale effect on fusing remote sensing and human sensing to portray urban functions[J]. IEEE Geoscience and Remote Sensing Letters, 2021, 18(1): 38-42. DOI:10.1109/LGRS.2020.2965247 |

| [133] |

MA Ting. Multi-level relationships between satellite-derived nighttime lighting signals and social media-derived human population dynamics[J]. Remote Sensing, 2018, 10(7): 1128. DOI:10.3390/rs10071128 |

| [134] |

ZHAO Naizhuo, CAO Guofeng, ZHANG Wei, et al. Remote sensing and social sensing for socioeconomic systems: a comparison study between nighttime lights and location-based social media at the 500 m spatial resolution[J]. International Journal of Applied Earth Observation and Geoinformation, 2020, 87: 102058. DOI:10.1016/j.jag.2020.102058 |

| [135] |

李小文. 定量遥感的发展与创新[J]. 河南大学学报(自然科学版), 2005, 35(4): 49-56. LI Xiaowen. Retrospect, prospect and innovation in quantitative remote sensing[J]. Journal of Henan University (Natural Science), 2005, 35(4): 49-56. DOI:10.3969/j.issn.1003-4978.2005.04.012 |

| [136] |

岳林蔚, 沈焕锋, 袁强强, 等. 基于深度置信网络的多源DEM点面融合模型[J]. 武汉大学学报(信息科学版), 2021, 46(7): 1090-1097. YUE Linwei, SHEN Huanfeng, YUAN Qiangqiang, et al. A multi-source DEM point-surface fusion model based on deep belief network[J]. Geomatics and Information Science of Wuhan University, 2021, 46(7): 1090-1097. |

| [137] |

LI Tongwen, SHEN Huanfeng, ZENG Chao, et al. Point-surface fusion of station measurements and satellite observations for mapping PM2.5 distribution in China: methods and assessment[J]. Atmospheric Environment, 2017, 152: 477-489. DOI:10.1016/j.atmosenv.2017.01.004 |

| [138] |

李同文. 顾及时空特征的大气PM2.5神经网络遥感反演[D]. 武汉: 武汉大学, 2020. DOI: 10.27379/d.cnki.gwhdu.2020.000817. LI Tongwen. Research on atmospheric PM2.5 neural network remote sensing retrieval considering spatiotemporal characteristics[D]. Wuhan: Wuhan University, 2020. DOI: 10.27379/d.cnki.gwhdu.2020.000817. |

| [139] |

YANG Qianqian, YUAN Qiangqiang, YUE Linwei, et al. Mapping PM2.5 concentration at a sub-km level resolution: a dual-scale retrieval approach[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 165: 140-151. DOI:10.1016/j.isprsjprs.2020.05.018 |

| [140] |

YUAN Qiangqiang, SHEN Huanfeng, LI Tongwen, et al. Deep learning in environmental remote sensing: achievements and challenges[J]. Remote Sensing of Environment, 2020, 241: 111716. DOI:10.1016/j.rse.2020.111716 |

| [141] |

杨倩倩, 靳才溢, 李同文, 等. 数据驱动的定量遥感研究进展与挑战[J]. 遥感学报, 2022, 26(2): 268-285. YANG Qianqian, JIN Caiyi, LI Tongwen, et al. Research progress and challenges of data-driven quantitative remote sensing[J]. National Remote Sensing Bulletin, 2022, 26(2): 268-285. |

| [142] |

MCCOLL K A, ALEMOHAMMAD S H, AKBAR R, et al. The global distribution and dynamics of surface soil moisture[J]. Nature Geoscience, 2017, 10(2): 100-104. DOI:10.1038/ngeo2868 |

| [143] |

VITERBO P, BETTS A K. Impact of the ECMWF reanalysis soil water on forecasts of the July 1993 Mississippi flood[J]. Journal of Geophysical Research: Atmospheres, 1999, 104(D16): 19361-19366. DOI:10.1029/1999JD900449 |

| [144] |

TRENBERTH K E, GUILLEMOT C J. Evaluation of the global atmospheric moisture budget as seen from analyses[J]. Journal of Climate, 1995, 8(9): 2255-2272. DOI:10.1175/1520-0442(1995)008<2255:EOTGAM>2.0.CO;2 |

| [145] |

BAGHDADI N, GAULTIER S, KING C. Retrieving surface roughness and soil moisture from synthetic aperture radar (SAR) data using neural networks[J]. Canadian Journal of Remote Sensing, 2002, 28(5): 701-711. DOI:10.5589/m02-066 |

| [146] |

SANTI E, PETTINATO S, PALOSCIA S, et al. An algorithm for generating soil moisture and snow depth maps from microwave spaceborne radiometers: HydroAlgo[J]. Hydrology and Earth System Sciences, 2012, 16(10): 3659-3676. DOI:10.5194/hess-16-3659-2012 |

| [147] |

AIRES F, PRIGENT C, ROSSOW W B. Sensitivity of satellite microwave and infrared observations to soil moisture at a global scale: 2. Global statistical relationships[J]. Journal of Geophysical Research: Atmospheres, 2005, 110(D11): D11103. DOI:10.1029/2004JD005094 |

| [148] |

EROGLU O, KURUM M, BOYD D, et al. High spatio-temporal resolution CYGNSS soil moisture estimates using artificial neural networks[J]. Remote Sensing, 2019, 11(19): 2272. DOI:10.3390/rs11192272 |

| [149] |

SONG Xiaodong, ZHANG Ganlin, LIU Feng, et al. Modeling spatiotemporal distribution of soil moisture by deep learning-based cellular automata model[J]. Journal of Arid Land, 2016, 8(5): 734-748. DOI:10.1007/s40333-016-0049-0 |

| [150] |

FANG Kuai, SHEN Chaopeng, KIFER D, et al. Prolongation of SMAP to spatiotemporally seamless coverage of continental U.S. using a deep learning neural network[J]. Geophysical Research Letters, 2017, 44(21): 11030-11039. |

| [151] |

HEGAZI E H, YANG Lingbo, HUANG Jingfeng. A convolutional neural network algorithm for soil moisture prediction from Sentinel-1 SAR images[J]. Remote Sensing, 2021, 13(24): 4964. DOI:10.3390/rs13244964 |

| [152] |

YUAN Qiangqiang, XU Hongzhang, LI Tongwen, et al. Estimating surface soil moisture from satellite observations using a generalized regression neural network trained on sparse ground-based measurements in the continental US[J]. Journal of Hydrology, 2020, 580: 124351. DOI:10.1016/j.jhydrol.2019.124351 |

| [153] |

ZHANG Yufang, LIANG Shunlin, ZHU Zhiliang, et al. Soil moisture content retrieval from Landsat 8 data using ensemble learning[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 185: 32-47. DOI:10.1016/j.isprsjprs.2022.01.005 |

| [154] |

曹梅盛, 李培基, ROBINSON D A, 等. 中国西部积雪SMMR微波遥感的评价与初步应用[J]. 环境遥感, 1993, 8(4): 260-269. CAO Meisheng, LI Peiji, ROBINSON D A, et al. Evaluation and primary application of microwave remote sensing SMMR-derived snow cover in western China[J]. Remote Sensing of Environment China, 1993, 8(4): 260-269. |

| [155] |

ROBINSON D A, DEWEY K F, HEIM R R JR. Global snow cover monitoring: an update[J]. Bulletin of the American Meteorological Society, 1993, 74(9): 1689-1696. DOI:10.1175/1520-0477(1993)074<1689:GSCMAU>2.0.CO;2 |

| [156] |

TEDESCO M, PULLIAINEN J, TAKALA M, et al. Artificial neural network-based techniques for the retrieval of SWE and snow depth from SSM/I data[J]. Remote Sensing of Environment, 2004, 90(1): 76-85. DOI:10.1016/j.rse.2003.12.002 |

| [157] |

CAO Yungang, YANG Xiuchun, ZHU Xiaohua. Retrieval snow depth by artificial neural network methodology from integrated AMSR-E and in-situ data—a case study in Qinghai-Tibet Plateau[J]. Chinese Geographical Science, 2008, 18(4): 356-360. DOI:10.1007/s11769-008-0356-2 |

| [158] |

FORMAN B A, REICHLE R H, DERKSEN C. Estimating passive microwave brightness temperature over snow-covered land in North America using a land surface model and an artificial neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2014, 52(1): 235-248. DOI:10.1109/TGRS.2013.2237913 |

| [159] |

EVORA N D, TAPSOBA D, DE SEVE D. Combining artificial neural network models, geostatistics, and passive microwave data for snow water equivalent retrieval and mapping[J]. IEEE Transactions on Geoscience and Remote Sensing, 2008, 46(7): 1925-1939. DOI:10.1109/TGRS.2008.916632 |

| [160] |

DARIANE A B, AZIMI S, ZAKERINEJAD A. Artificial neural network coupled with wavelet transform for estimating snow water equivalent using passive microwave data[J]. Journal of Earth System Science, 2014, 123(7): 1591-1601. DOI:10.1007/s12040-014-0485-1 |

| [161] |

BAIR E H, CALFA A A, RITTGER K, et al. Using machine learning for real-time estimates of snow water equivalent in the watersheds of Afghanistan[J]. The Cryosphere, 2018, 12(5): 1579-1594. DOI:10.5194/tc-12-1579-2018 |

| [162] |

WANG Jiwen, YUAN Qiangqiang, SHEN Huanfeng, et al. Estimating snow depth by combining satellite data and ground-based observations over Alaska: a deep learning approach[J]. Journal of Hydrology, 2020, 585: 124828. DOI:10.1016/j.jhydrol.2020.124828 |

| [163] |

YANG Jianwei, JIANG Lingmei, LUOJUS K, et al. Snow depth estimation and historical data reconstruction over China based on a random forest machine learning approach[J]. The Cryosphere, 2020, 14(6): 1763-1778. DOI:10.5194/tc-14-1763-2020 |

| [164] |

XU Xiaocong, LIU Xiaoping, LI Xia, et al. Global snow depth retrieval from passive microwave brightness temperature with machine learning approach[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 4302917. |

| [165] |

XIAO Xiongxin, ZHANG Tingjun, ZHONG Xinyue, et al. Support vector regression snow-depth retrieval algorithm using passive microwave remote sensing data[J]. Remote Sensing of Environment, 2018, 210: 48-64. DOI:10.1016/j.rse.2018.03.008 |

| [166] |

HU Yanxing, CHE Tao, DAI Liyun, et al. Snow depth fusion based on machine learning methods for the northern hemisphere[J]. Remote Sensing, 2021, 13(7): 1250. DOI:10.3390/rs13071250 |

| [167] |

KIDD C, LEVIZZANI V. Status of satellite precipitation retrievals[J]. Hydrology and Earth System Sciences, 2011, 15(4): 1109-1116. DOI:10.5194/hess-15-1109-2011 |

| [168] |

SHEN Zhehui, YONG Bin, GOURLEY J J, et al. Real-time bias adjustment for satellite-based precipitation estimates over Mainland China[J]. Journal of Hydrology, 2021, 596: 126133. DOI:10.1016/j.jhydrol.2021.126133 |

| [169] |

PRIGENT C. Precipitation retrieval from space: an overview[J]. Comptes Rendus Geoscience, 2010, 342(4-5): 380-389. DOI:10.1016/j.crte.2010.01.004 |

| [170] |

SUN Qiaohong, MIAO Chiyuan, DUAN Qingyun, et al. A review of global precipitation data sets: data sources, estimation, and intercomparisons[J]. Reviews of Geophysics, 2018, 56(1): 79-107. DOI:10.1002/2017RG000574 |

| [171] |

HU Qingfang, LI Zhe, WANG Leizhi, et al. Rainfall spatial estimations: a review from spatial interpolation to multi-source data merging[J]. Water, 2019, 11(3): 579. DOI:10.3390/w11030579 |

| [172] |

LI Xinyan, YANG Yuanjian, MI Jiaqin, et al. Leveraging machine learning for quantitative precipitation estimation from Fengyun-4 geostationary observations and ground meteorological measurements[J]. Atmospheric Measurement Techniques, 2021, 14(11): 7007-7023. DOI:10.5194/amt-14-7007-2021 |

| [173] |

WANG Cunguang, XU Jing, TANG Guoqiang, et al. Infrared precipitation estimation using convolutional neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 58(12): 8612-8625. DOI:10.1109/TGRS.2020.2989183 |

| [174] |

GRAHAM S L, KESSLER P B, MCKUSICK M K. Gprof: a call graph execution profiler[J]. ACM SIGPLAN Notices, 1982, 17(6): 120-126. DOI:10.1145/872726.806987 |

| [175] |

KUMMEROW C, HONG Y, OLSON W S, et al. The evolution of the Goddard profiling algorithm (GPROF) for rainfall estimation from passive microwave sensors[J]. Journal of Applied Meteorology, 2001, 40(11): 1801-1820. DOI:10.1175/1520-0450(2001)040<1801:TEOTGP>2.0.CO;2 |

| [176] |

RANDEL D L, KUMMEROW C D, RINGERUD S. The Goddard profiling (GPROF) precipitation retrieval algorithm[M]//LEVIZZANI V, KIDD C, KIRSCHBAUM D B, et al. Satellite Precipitation Measurement. Cham: Springer, 2020: 141-152. |

| [177] |

HUFFMAN G J, BOLVIN D T, NELKIN E J, et al. Integrated multi-satellite retrievals for GPM (IMERG) technical documentation[R]. USA: NASA/GSFC Code, 2015. |

| [178] |

KUBOTA T, AONASHI K, USHIO T, et al. Global satellite mapping of precipitation (GSMaP) products in the GPM era[M]//LEVIZZANI V, KIDD C, KIRSCHBAUM D B, et al. Satellite Precipitation Measurement. Cham: Springer, 2020: 355-373. |

| [179] |

KUBOTA T, SHIGE S, HASHIZUME H, et al. Global precipitation map using satellite-borne microwave radiometers by the GSMaP project: production and validation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2007, 45(7): 2259-2275. DOI:10.1109/TGRS.2007.895337 |

| [180] |

USHIO T, SASASHIGE K, KUBOTA T, et al. A Kalman filter approach to the global satellite mapping of precipitation (GSMaP) from combined passive microwave and infrared radiometric data[J]. Journal of the Meteorological Society of Japan. Ser. Ⅱ, 2009, 87A: 137-151. DOI:10.2151/jmsj.87A.137 |

| [181] |

JOYCE R J, JANOWIAK J E, ARKIN P A, et al. CMORPH: a method that produces global precipitation estimates from passive microwave and infrared data at high spatial and temporal resolution[J]. Journal of Hydrometeorology, 2004, 5(3): 487-503. DOI:10.1175/1525-7541(2004)005<0487:CAMTPG>2.0.CO;2 |

| [182] |

JOYCE R J, XIE Pingping. Kalman filter-based CMORPH[J]. Journal of Hydrometeorology, 2011, 12(6): 1547-1563. DOI:10.1175/JHM-D-11-022.1 |

| [183] |

BAEZ-VILLANUEVA O M, ZAMBRANO-BIGIARINI M, BECK H E, et al. RF-MEP: a novel random forest method for merging gridded precipitation products and ground-based measurements[J]. Remote Sensing of Environment, 2020, 239: 111606. DOI:10.1016/j.rse.2019.111606 |

| [184] |

TOPP S N, PAVELSKY T M, JENSEN D, et al. Research trends in the use of remote sensing for inland water quality science: moving towards multidisciplinary applications[J]. Water, 2020, 12(1): 169. DOI:10.3390/w12010169 |

| [185] |

HAFEEZ S, WONG M S, HO H C, et al. Comparison of machine learning algorithms for retrieval of water quality indicators in Case-Ⅱ waters: a case study of Hong Kong[J]. Remote Sensing, 2019, 11(6): 617. DOI:10.3390/rs11060617 |

| [186] |

SHEN Ming, DUAN Hongtao, CAO Zhigang, et al. Sentinel-3 OLCI observations of water clarity in large lakes in eastern China: implications for SDG 6.3.2 evaluation[J]. Remote Sensing of Environment, 2020, 247: 111950. DOI:10.1016/j.rse.2020.111950 |

| [187] |

SAGAN V, PETERSON K T, MAIMAITIJIANG M, et al. Monitoring inland water quality using remote sensing: potential and limitations of spectral indices, bio-optical simulations, machine learning, and cloud computing[J]. Earth-Science Reviews, 2020, 205: 103187. DOI:10.1016/j.earscirev.2020.103187 |

| [188] |

MACIEL D A, BARBOSA C C F, DE MORAES NOVO E M L, et al. Water clarity in Brazilian water assessed using Sentinel-2 and machine learning methods[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 182: 134-152. DOI:10.1016/j.isprsjprs.2021.10.009 |

| [189] |

RUBIN H J, LUTZ D A, STEELE B G, et al. Remote sensing of lake water clarity: performance and transferability of both historical algorithms and machine learning[J]. Remote Sensing, 2021, 13(8): 1434. DOI:10.3390/rs13081434 |

| [190] |

GUO Hongwei, TIAN Shang, HUANG J J, et al. Performance of deep learning in mapping water quality of Lake Simcoe with long-term Landsat archive[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 183: 451-469. DOI:10.1016/j.isprsjprs.2021.11.023 |

| [191] |

GHOLIZADEH M H, MELESSE A M, REDDI L. A comprehensive review on water quality parameters estimation using remote sensing techniques[J]. Sensors, 2016, 16(8): 1298. DOI:10.3390/s16081298 |

| [192] |

ZHI Wei, FENG Dapeng, TSAI W P, et al. From hydrometeorology to river water quality: can a deep learning model predict dissolved oxygen at the continental scale?[J]. Environmental Science & Technology, 2021, 55(4): 2357-2368. |

| [193] |

KOLIOS S, HATZIANASTASSIOU N. Quantitative aerosol optical depth detection during dust outbreaks from Meteosat imagery using an artificial neural network model[J]. Remote Sensing, 2019, 11(9): 1022. DOI:10.3390/rs11091022 |

| [194] |

YEOM J M, JEONG S, HA J S, et al. Estimation of the hourly aerosol optical depth from GOCI geostationary satellite data: deep neural network, machine learning, and physical models[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 4103612. |

| [195] |

JOHARESTANI M Z, CAO Chunxiang, NI Xiliang, et al. PM2.5 prediction based on random forest, XGBoost, and deep learning using multisource remote sensing data[J]. Atmosphere, 2019, 10(7): 373. DOI:10.3390/atmos10070373 |

| [196] |

LI Tongwen, SHEN Huanfeng, YUAN Qiangqiang, et al. Estimating ground-level PM2.5 by fusing satellite and station observations: a geo-intelligent deep learning approach[J]. Geophysical Research Letters, 2017, 44(23): 11985-11993. DOI:10.1002/2017GL075710 |

| [197] |

LI Tongwen, SHEN Huanfeng, YUAN Qiangqiang, et al. Geographically and temporally weighted neural networks for satellite-based mapping of ground-level PM2.5[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 167: 178-188. DOI:10.1016/j.isprsjprs.2020.06.019 |

| [198] |

LI Rui, CUI Lulu, FU Hongbo, et al. Satellite-based estimation of full-coverage ozone (O3) concentration and health effect assessment across Hainan Island[J]. Journal of Cleaner Production, 2020, 244: 118773. DOI:10.1016/j.jclepro.2019.118773 |

| [199] |

LI Lianfa, WU Jiajie. Spatiotemporal estimation of satellite-borne and ground-level NO2 using full residual deep networks[J]. Remote Sensing of Environment, 2021, 254: 112257. DOI:10.1016/j.rse.2020.112257 |

| [200] |

WANG Yuan, YUAN Qiangqiang, LI Tongwen, et al. Estimating daily full-coverage near surface O3, CO, and NO2 concentrations at a high spatial resolution over China based on S5P-TROPOMI and GEOS-FP[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 175: 311-325. DOI:10.1016/j.isprsjprs.2021.03.018 |

| [201] |

CHOI H, KANG Y, IM J. Estimation of TROPOMI-derived ground-level SO2 concentrations using machine learning over East Asia[J]. Korean Journal of Remote Sensing, 2021, 37(2): 275-290. |

| [202] |

LI Tongwen, CHENG Xiao. Estimating daily full-coverage surface ozone concentration using satellite observations and a spatiotemporally embedded deep learning approach[J]. International Journal of Applied Earth Observation and Geoinformation, 2021, 101: 102356. DOI:10.1016/j.jag.2021.102356 |

| [203] |

WANG Yuan, YUAN Qiangqiang, ZHU Liye, et al. Spatiotemporal estimation of hourly 2 km ground-level ozone over China based on Himawari-8 using a self-adaptive geospatially local model[J]. Geoscience Frontiers, 2022, 13(1): 101286. DOI:10.1016/j.gsf.2021.101286 |

| [204] |

ALEMANY S, BELTRAN J, PEREZ A, et al. Predicting hurricane trajectories using a recurrent neural network[C]//Proceedings of the 33rd AAAI Conference on Artificial Intelligence and 31st Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence. Honolulu, HI, USA: AAAI Press, 2019: 58. |

| [205] |

KIM S, KIM H, LEE J, et al. Deep-hurricane-tracker: tracking and forecasting extreme climate events[C]//Proceedings of 2019 IEEE Winter Conference on Applications of Computer Vision (WACV). Waikoloa, HI, USA: IEEE, 2019: 1761-1769. |

| [206] |

YU Manzhu, HUANG Qunying, QIN Han, et al. Deep learning for real-time social media text classification for situation awareness - using Hurricanes Sandy, Harvey, and Irma as case studies[J]. International Journal of Digital Earth, 2019, 12(11): 1230-1247. DOI:10.1080/17538947.2019.1574316 |

| [207] |

JAIN P, COOGAN S C P, SUBRAMANIAN S G, et al. A review of machine learning applications in wildfire science and management[J]. Environmental Reviews, 2020, 28(4): 478-505. DOI:10.1139/er-2020-0019 |

| [208] |

BOLOORANI A D, SAMANY N N, PAPI R, et al. Dust source susceptibility mapping in Tigris and Euphrates basin using remotely sensed imagery[J]. CATENA, 2022, 209: 105795. DOI:10.1016/j.catena.2021.105795 |

| [209] |

LIN Xin, CHANG Hong, WANG Kaibo, et al. Machine learning for source identification of dust on the Chinese Loess Plateau[J]. Geophysical Research Letters, 2020, 47(21): e2020GL088950. |

| [210] |

GHOLAMI V, SAHOUR H, AMRI M A H. Soil erosion modeling using erosion pins and artificial neural networks[J]. CATENA, 2021, 196: 104902. DOI:10.1016/j.catena.2020.104902 |

| [211] |

RODRIGUES M, DE LA RIVA J. An insight into machine-learning algorithms to model human-caused wildfire occurrence[J]. Environmental Modelling & Software, 2014, 57: 192-201. |

| [212] |

CAICEDO J P R, VERRELST J, MUÑOZ-MARÍ J, et al. Toward a semiautomatic machine learning retrieval of biophysical parameters[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2014, 7(4): 1249-1259. DOI:10.1109/JSTARS.2014.2298752 |

| [213] |

VERRELST J, CAMPS-VALLS G, MUÑOZ-MARÍ J, et al. Optical remote sensing and the retrieval of terrestrial vegetation bio-geophysical properties - A review[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2015, 108: 273-290. DOI:10.1016/j.isprsjprs.2015.05.005 |

| [214] |

VERRELST J, MUÑOZ J, ALONSO L, et al. Machine learning regression algorithms for biophysical parameter retrieval: opportunities for Sentinel-2 and -3[J]. Remote Sensing of Environment, 2012, 118: 127-139. DOI:10.1016/j.rse.2011.11.002 |

| [215] |

SHAH S H, ANGEL Y, HOUBORG R, et al. A random forest machine learning approach for the retrieval of leaf chlorophyll content in wheat[J]. Remote Sensing, 2019, 11(8): 920. DOI:10.3390/rs11080920 |

| [216] |

XIN Qinchuan, LI Jing, LI Ziming, et al. Evaluations and comparisons of rule-based and machine-learning-based methods to retrieve satellite-based vegetation phenology using MODIS and USA National Phenology Network data[J]. International Journal of Applied Earth Observation and Geoinformation, 2020, 93: 102189. DOI:10.1016/j.jag.2020.102189 |

| [217] |

WANG Hongquan, MAGAGI R, GOÏTA K, et al. Crop phenology retrieval via polarimetric SAR decomposition and Random Forest algorithm[J]. Remote Sensing of Environment, 2019, 231: 111234. DOI:10.1016/j.rse.2019.111234 |